The industry generally sees applications built on large models as concentrated in two directions: RAG and Agent. Either way, designing, implementing, and optimizing an application that fully exploits the potential of a large language model (LLM) takes considerable effort and expertise. As developers build increasingly complex LLM applications, the development process becomes more complicated and its design space grows large. The article "How to Build Apps Based on Large Models" provides an exploratory basic framework for large model application development that largely applies to both RAG and Agent. But is there anything unique about agent-oriented development? Is there a large model application development framework focused on agents?

So, what is Agent?

1. What is Agent

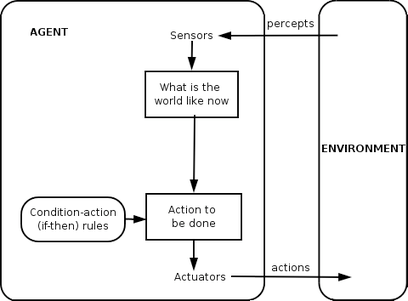

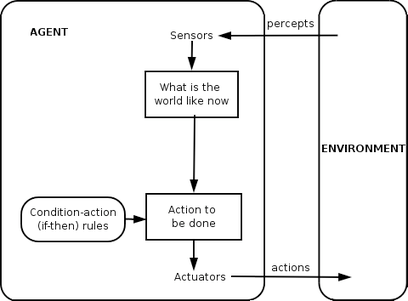

Agent here refers to an intelligent entity, a notion that can be traced back to Minsky's book "Society of Mind". Minsky's definition there is a bit abstract: "an individual in society can find a solution to a problem through negotiation, and this individual is an agent". In the computer field, an agent is an entity that perceives its environment through sensors and acts on that environment through actuators. An agent can therefore be defined as a mapping from a perception sequence to actions. It is generally believed that an agent is a computing entity that resides in some environment, acts continuously and autonomously, and has characteristics such as autonomy, reactivity, social ability, and pro-activeness.

Intelligence is an emergent property of the interaction between an agent and its environment.

1.1 Agent Structure and Characteristics

The general structure of Agent is shown in the figure below:

The main features of Agent are:

- Autonomy: It operates without direct intervention from humans or other agents and exercises some control over its own behavior and internal state.

- Social Ability: Ability to interact with other agents (or humans) through some kind of communication. There are three main types of interaction: Cooperation, Coordination, and Negotiation.

- Reactivity: Ability to perceive the environment (which can be the physical world, a user connected via a graphical user interface, a series of other agents, the Internet, or a combination of all of these) and respond promptly to changes in the environment.

- Pro-activeness: Not only can one respond to the environment, but one can also take proactive actions to achieve one's goals.

If we try to formalize the Agent, it might look like this:

Agent = platform + agent program

platform = computing device + sensor + actuator

agent program is a proper subset of agent function
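The idea of an agent as a mapping from a perception sequence to actions can be made concrete with a small sketch. The class name `Agent`, the `thermostat_program` rule, and the percept and action strings are all illustrative assumptions, not part of any framework:

```python
from typing import Callable, List

Percept = str
Action = str


class Agent:
    """Minimal agent: records the percept sequence and maps it to an action."""

    def __init__(self, program: Callable[[List[Percept]], Action]) -> None:
        self.program = program              # the "agent program"
        self.percepts: List[Percept] = []   # perception sequence seen so far

    def step(self, percept: Percept) -> Action:
        self.percepts.append(percept)       # input arriving from a sensor
        return self.program(self.percepts)  # action handed to an actuator


def thermostat_program(percepts: List[Percept]) -> Action:
    """Hypothetical rule that reacts to the latest reading only."""
    return "heat_on" if percepts[-1] == "cold" else "heat_off"


agent = Agent(thermostat_program)
actions = [agent.step(p) for p in ["cold", "warm", "cold"]]
print(actions)  # ['heat_on', 'heat_off', 'heat_on']
```

The program receives the whole percept sequence, so a more elaborate rule could use history rather than only the latest reading.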

1.2 Agents in the Large Model Domain

In the field of large models, the large model replaces the rule engine and knowledge base of a traditional agent. Agents converse with each other, and those conversations seek or provide reasoning, observation, criticism, and verification. In particular, with the right prompts and inference settings, a single LLM can display a wide range of capabilities. Dialogues between differently configured agents help combine these capabilities in a modular and complementary way.

Developers can easily and quickly create agents with different roles, for example agents that write code, execute code, bring in human feedback, or verify output. An agent's backend can also be easily extended to allow more customized behavior by selecting and configuring a subset of the built-in functions.

2. What is Multi-Agent

Multi-Agent (multi-agent system) refers to a group system composed of multiple autonomous individuals that pursue their goals by communicating and exchanging information with one another.

Generally, a Multi-Agent system is composed of a series of interacting agents together with their organizational rules and information interaction protocols. Through mutual communication, cooperation, and competition, the agents complete complex work that no single agent could accomplish alone, which makes it a "system of systems".

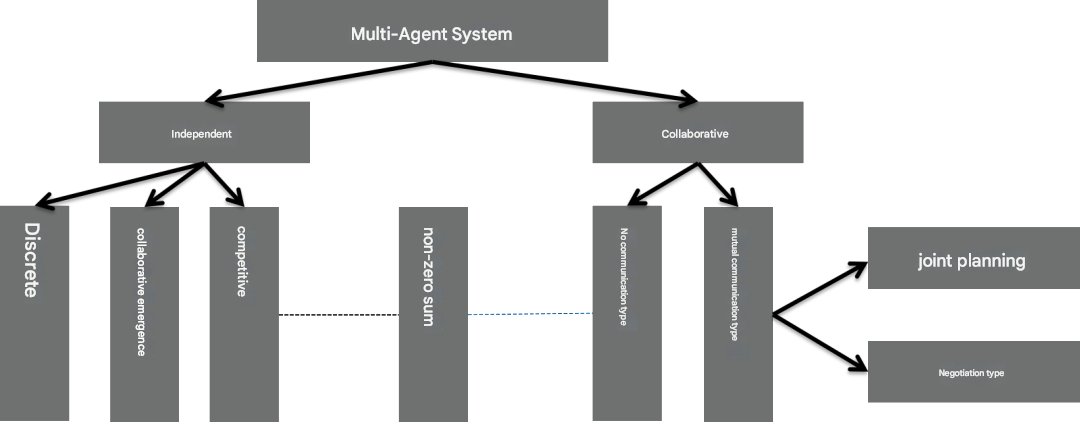

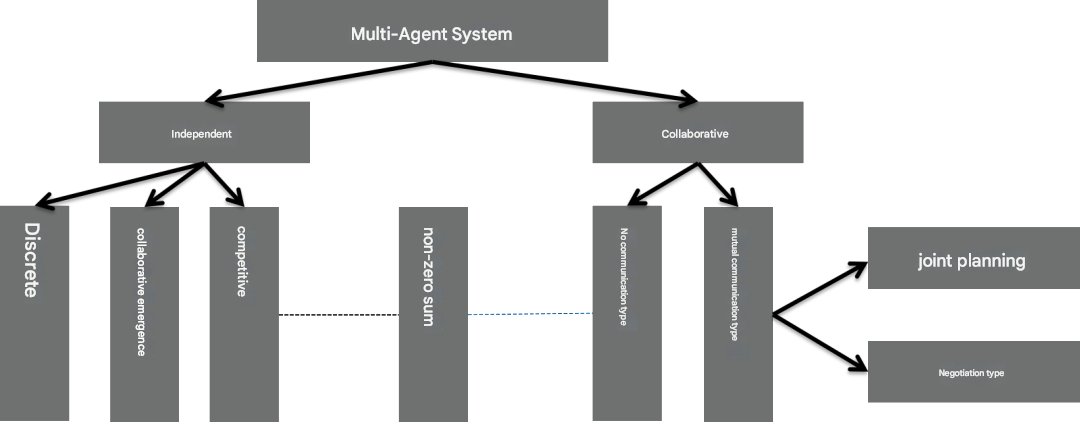

2.1 Multi-Agent System Classification and Characteristics

Multi-Agent Systems (MAS) can be mainly divided into the following categories:

The main features of the Multi-Agent system are as follows:

- Autonomy. In a Multi-Agent system, each agent can manage its own behavior and cooperate or compete autonomously.

- Fault tolerance. Agents can work together to form a cooperative system to achieve independent or common goals. If some agents fail, other agents will autonomously adapt to the new environment and continue to work, without causing the entire system to fail.

- Flexibility and scalability. The Multi-Agent system itself adopts a distributed design, and the Agent has the characteristics of high cohesion and low coupling, which makes the system extremely scalable.

- Collaboration capability. The Multi-Agent system is a distributed system where agents can collaborate with each other through appropriate strategies to achieve global goals.

2.2 Multi-Agent in Large Model Domains

Specifically, in large model-based application areas, LLM has been shown to be able to solve complex tasks when they are decomposed into simpler subtasks. Multi-agent communication and collaboration can achieve this subtask decomposition and integration through the intuitive way of "dialogue".

To make large model-based agents suitable for multi-agent dialogue, each agent is made conversable: it can receive, react to, and respond to messages. When configured correctly, an agent can automatically hold multi-turn conversations with other agents, or request human input in certain turns, which brings human feedback into the loop. The conversable agent design takes advantage of the strong ability of LLMs to take in feedback and make progress through chat, and also allows LLM capabilities to be combined in a modular way.
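The receive-and-reply cycle described above, including the optional human turn, can be sketched in plain Python. This is a minimal illustration under stated assumptions: `ConversableSketch`, `chat`, and the scripted feedback are hypothetical names, and the reply functions stand in for LLM calls; none of this is AutoGen's actual implementation.

```python
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]


class ConversableSketch:
    """Hypothetical stand-in for a conversable agent (not AutoGen's class)."""

    def __init__(
        self,
        name: str,
        reply_fn: Callable[[List[Message]], str],
        human_input_fn: Optional[Callable[[Message], str]] = None,
    ) -> None:
        self.name = name
        self.reply_fn = reply_fn              # stands in for an LLM call
        self.human_input_fn = human_input_fn  # optional human in the loop
        self.received: List[Message] = []

    def receive(self, message: Message) -> Message:
        self.received.append(message)         # receive
        if self.human_input_fn is not None:
            feedback = self.human_input_fn(message)
            if feedback:                      # human feedback overrides the auto reply
                return {"sender": self.name, "content": feedback}
        # react to everything received so far, then respond
        return {"sender": self.name, "content": self.reply_fn(self.received)}


def chat(initiator: ConversableSketch, responder: ConversableSketch,
         opening: str, max_turns: int) -> List[Message]:
    """Alternate turns between two agents for a fixed number of replies."""
    transcript = [{"sender": initiator.name, "content": opening}]
    listener, speaker = responder, initiator
    for _ in range(max_turns):
        transcript.append(listener.receive(transcript[-1]))
        listener, speaker = speaker, listener
    return transcript


# Scripted human input: no feedback on the first prompt, feedback on the second.
scripted_feedback = iter(["", "please add tests"])
user = ConversableSketch("user", lambda msgs: "continue",
                         human_input_fn=lambda msg: next(scripted_feedback, ""))
assistant = ConversableSketch("assistant", lambda msgs: f"draft v{len(msgs)}")

transcript = chat(user, assistant, "write a sort function", max_turns=4)
for m in transcript:
    print(f'{m["sender"]}: {m["content"]}')
```

When the human supplies nothing, the agent falls back to its automatic reply, which is the behavior that lets a conversation run unattended between occasional human interventions.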

3. Common Agent and Multi-Agent Systems Based on Large Models

3.1 Single Agent System

Common single-agent systems based on large models include:

- AutoGPT: AutoGPT is an open source implementation of an AI agent that tries to automatically achieve a given goal. It follows the single-agent paradigm, uses many useful tools to enhance the AI model, and does not support Multi-Agent collaboration.

- ChatGPT + (code interpreter or plugin): ChatGPT is a conversational AI Agent that can now be used with a code interpreter or plugins. The code interpreter enables ChatGPT to execute code, while plugins extend ChatGPT with a range of curated tools.

- LangChain Agent: LangChain is a general framework for developing LLM-based applications. LangChain has various types of agents, and ReAct Agent is a famous example. All LangChain agents follow the single-agent paradigm and are not designed for communication and collaboration.

- Transformers Agent: Transformers Agent is an experimental natural language API built on the Transformers library. It includes a set of curated tools and an agent that interprets natural language and uses these tools. Like AutoGPT, it follows the single-agent paradigm and does not support collaboration between agents.

4. Multi-Agent-based LLM application development framework: AutoGen

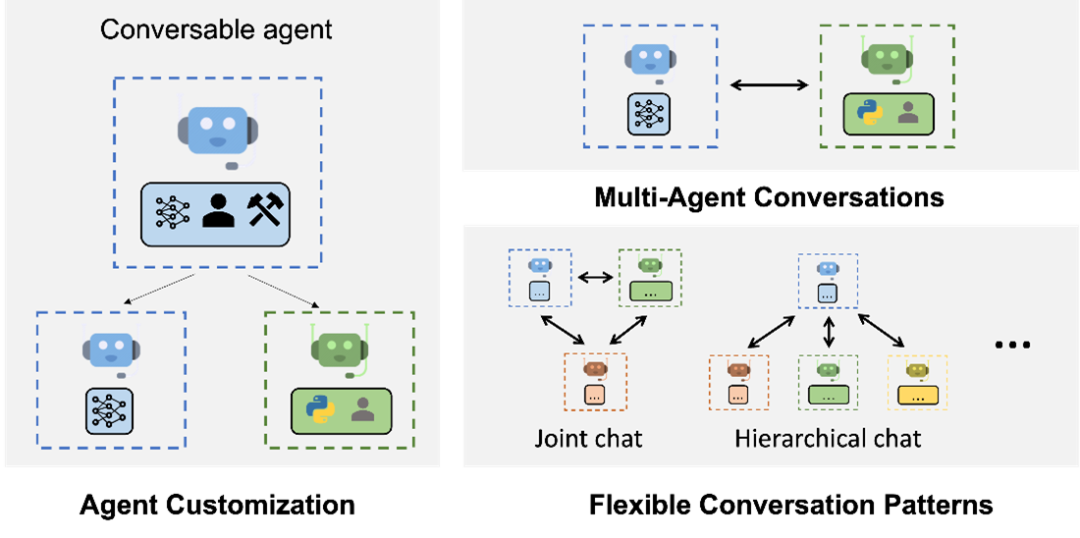

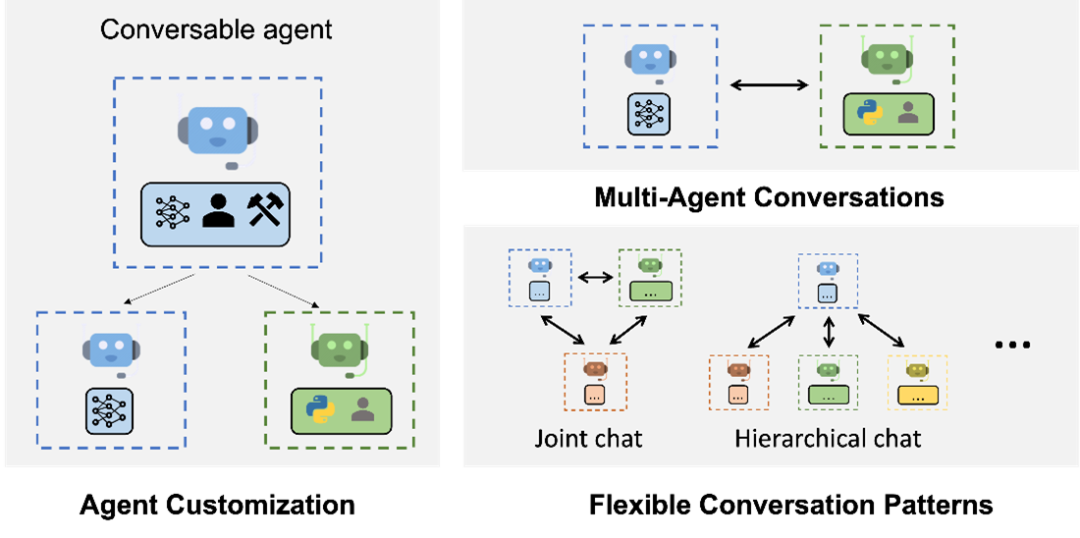

AutoGen is a development framework for simplifying the orchestration, optimization, and automation of LLM workflows. It provides customizable and conversable agents that leverage the strongest capabilities of advanced LLMs, such as GPT-4, while addressing their limitations by integrating with humans and tools and by holding automated conversations between multiple agents.

4.1 Typical Examples of AutoGen

AutoGen uses Multi-Agent conversations to enable complex LLM-based workflows. A typical example is as follows:

The left picture shows the customizable agents generated by AutoGen, which can be based on LLMs, tools, humans, or a combination of them. The upper right picture shows that agents can solve tasks through dialogue, and the lower right picture shows that AutoGen supports many additional complex conversation patterns.

4.2 General Usage of AutoGen

Using AutoGen, building a complex Multi-Agent conversational system boils down to:

- Define a set of Agents with specialized functions and roles.

- Define the interaction behavior between agents, for example, what one agent should reply when it receives a message from another agent.

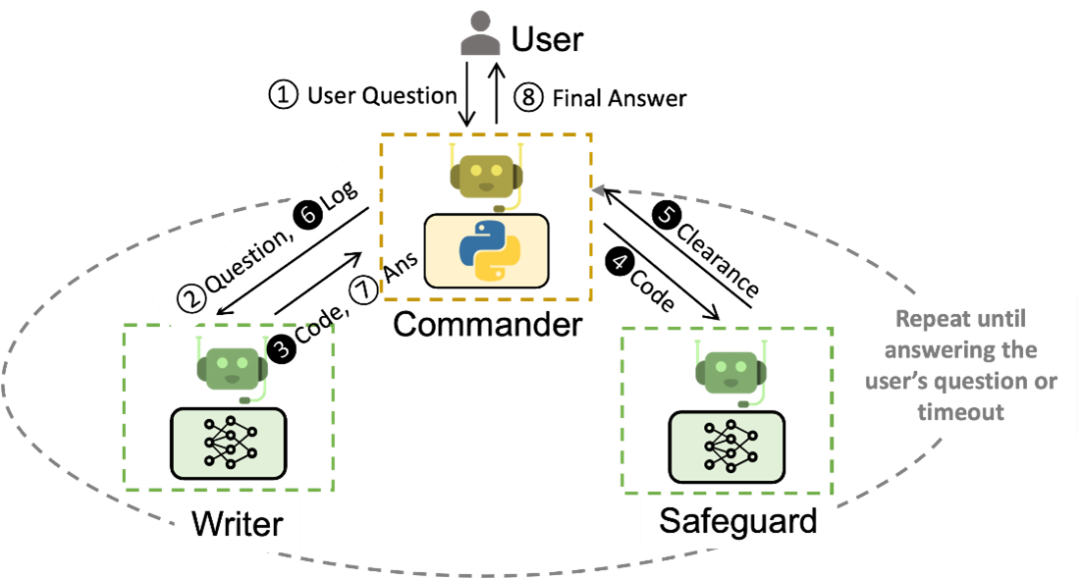

Both steps are modular, making these agents reusable and composable. For example, to build a code-based question-answering system, you can design agents and their interactions so that such a system can reduce the number of manual interactions required for the application. A workflow for solving problems in code is shown in the figure below:

The commander receives questions from users and coordinates with the writer and the safeguard. The writer produces the code and its interpretation, the safeguard ensures safety, and the commander executes the code. If a problem occurs, the process can be repeated until the problem is solved.
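The commander, writer, and safeguard loop described above can be sketched as ordinary functions. Everything here is an illustrative assumption: the function names, the stub writer that produces unsafe code on its first attempt, and the string-matching safety rule stand in for LLM-backed agents, and a real system would run code in a sandbox rather than with `exec`.

```python
import contextlib
import io
from typing import Callable, Tuple


def commander(question: str,
              writer: Callable[[str, str], str],
              safeguard: Callable[[str], bool],
              executor: Callable[[str], Tuple[bool, str]],
              max_rounds: int = 3) -> str:
    """Coordinate writer and safeguard, execute approved code, retry on problems."""
    feedback = ""
    for _ in range(max_rounds):
        code = writer(question, feedback)   # writer drafts code
        if not safeguard(code):             # safeguard vets it
            feedback = "code rejected by safeguard"
            continue
        ok, output = executor(code)         # commander executes it
        if ok:
            return output
        feedback = f"execution failed: {output}"
    return "unresolved"


def stub_writer(question: str, feedback: str) -> str:
    # Hypothetical writer: the first draft is unsafe, the retry is fine.
    if not feedback:
        return "import os; os.remove('data.csv')"
    return "print(2 + 3)"


def stub_safeguard(code: str) -> bool:
    # Hypothetical rule: reject anything that deletes files.
    return "os.remove" not in code


def stub_executor(code: str) -> Tuple[bool, str]:
    # Runs the code and captures stdout. Illustration only, not a sandbox.
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, {})
    except Exception as exc:
        return False, repr(exc)
    return True, buffer.getvalue().strip()


result = commander("What is 2 + 3?", stub_writer, stub_safeguard, stub_executor)
print(result)  # 5
```

The first round is rejected by the safeguard, so the commander feeds that back to the writer and the second round succeeds.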

5. AutoGen's core feature: customizable Agent

Agents in AutoGen have functionality enabled by LLM, humans, tools, or a mix of these elements. For example:

- The use and roles of LLMs in Agents can be easily configured through advanced reasoning features (automatically solving complex tasks through group chat).

- Human intelligence and oversight can be brought in through a proxy agent with different levels and modes of involvement, for example, automated task solving using GPT-4 + multiple human users.

- Agent has native support for LLM-driven code/function execution, e.g., automatic problem solving through code generation, execution and debugging, using the provided tools as functions.

5.1 Assistant Agent

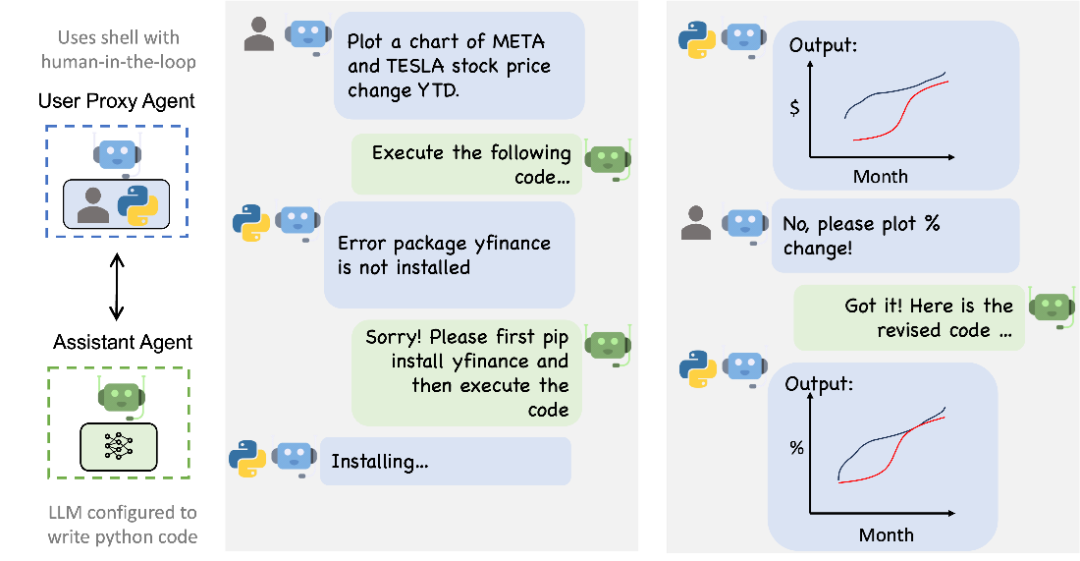

A simple way to use AutoGen Assistant Agent is to invoke automated chats between Assistant Agent and User Agent. It is easy to build an enhanced version of ChatGPT + Code Interpreter + Plugin (as shown below) with customizable automation capabilities that can be used in custom environments and embedded into larger systems.

In the figure above, Assistant Agent plays the role of an AI assistant, such as Bing Chat. User Agent plays the role of a user and simulates the user's behavior, such as code execution. AutoGen automates the chat between two agents while allowing for human feedback or intervention. User Agents interact seamlessly with humans and use tools when appropriate.

5.2 Multi-Agent Session

An agent-conversation-centric design has many benefits, including:

- Naturally handle ambiguity, feedback, progress, and collaboration.

- Enable effective coding-related tasks, such as tool use with back-and-forth troubleshooting.

- Allow users to seamlessly opt in or opt out via an agent in the chat.

- Achieve collective goals through collaboration among multiple experts.

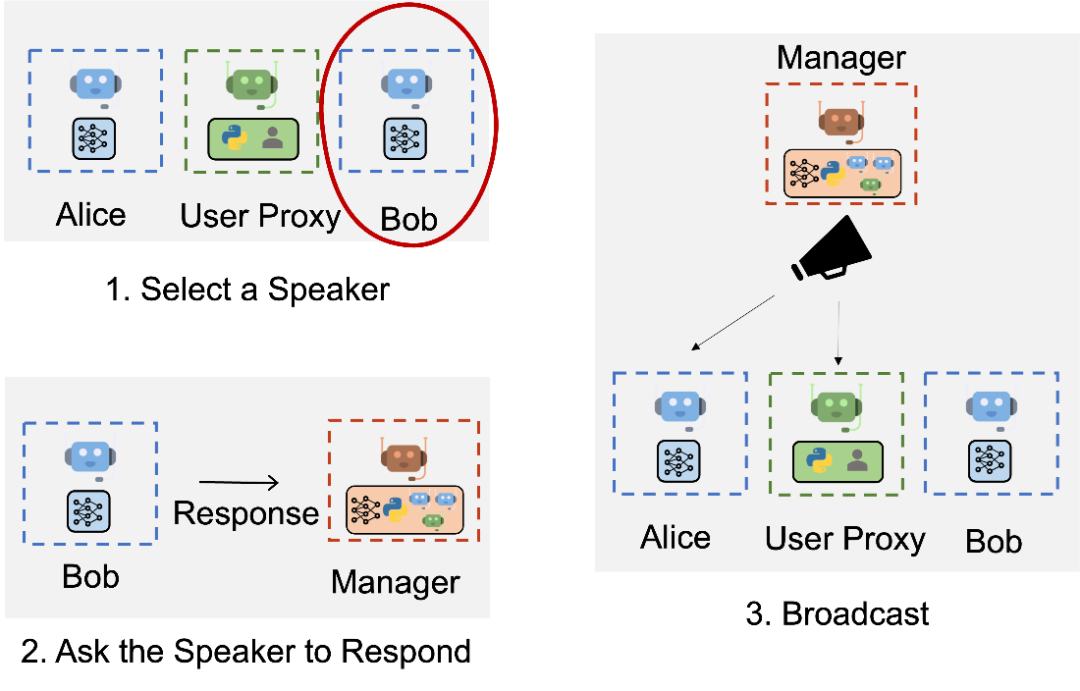

AutoGen supports automated chat and diverse communication patterns, which makes it easy to orchestrate complex, dynamic workflows and to experiment with different setups. In the figure below, a special agent called "GroupChatManager" is used to support group chats between multiple agents.

The GroupChatManager is a special agent that repeats the following three steps: select a speaker (in this case, Bob), ask the speaker to respond, and broadcast the selected speaker's message to all other agents.
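The three-step loop above (select a speaker, ask for a response, broadcast it) can be sketched without any framework. The names `Participant`, `run_group_chat`, and `round_robin` are hypothetical, and round-robin selection is an assumption made to keep the example deterministic; AutoGen's own speaker selection may work differently.

```python
from typing import Callable, List, Tuple

LogEntry = Tuple[str, str]  # (sender name, message)


class Participant:
    """Hypothetical group chat member with an inbox of broadcast messages."""

    def __init__(self, name: str, reply_fn: Callable[[List[LogEntry]], str]) -> None:
        self.name = name
        self.reply_fn = reply_fn
        self.inbox: List[LogEntry] = []

    def respond(self) -> str:
        return self.reply_fn(self.inbox)


def round_robin(participants: List[Participant], log: List[LogEntry]) -> Participant:
    # Assumed selection policy: take turns in list order.
    return participants[(len(log) - 1) % len(participants)]


def run_group_chat(participants: List[Participant], opening: str,
                   select_speaker: Callable[[List[Participant], List[LogEntry]], Participant],
                   rounds: int) -> List[LogEntry]:
    log: List[LogEntry] = [("manager", opening)]
    for p in participants:
        p.inbox.append(log[0])
    for _ in range(rounds):
        speaker = select_speaker(participants, log)  # 1. select a speaker
        message = speaker.respond()                  # 2. ask the speaker to respond
        log.append((speaker.name, message))
        for p in participants:                       # 3. broadcast to all others
            if p is not speaker:
                p.inbox.append((speaker.name, message))
    return log


def make_reply(name: str) -> Callable[[List[LogEntry]], str]:
    return lambda inbox: f"{name} saw {len(inbox)} message(s)"


members = [Participant(n, make_reply(n)) for n in ["Alice", "Bob", "Carol"]]
log = run_group_chat(members, "Plan the release.", round_robin, rounds=3)
for sender, message in log:
    print(f"{sender}: {message}")
```

Because the speaker's own message is not added to its inbox, each participant's inbox holds only what others have said, which is what the broadcast step delivers.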

In summary, AutoGen is designed as a general infrastructure for building LLM applications. Its conversation patterns cover almost all the patterns found in existing LLM systems. AutoGen allows flexible conversation patterns, both static and dynamic, that can be customized to application needs; in a "static" pattern, the agent topology stays the same regardless of the input. Its Multi-Agent system can execute LLM-generated code and allows humans to participate while the system runs.

6. AutoGen Usage Examples

AutoGen provides many interesting examples on GitHub. Here, we take https://github.com/microsoft/autogen/blob/main/notebook/agentchat_human_feedback.ipynb as an example to briefly show how to use AutoGen to build an application based on Multi-Agent conversation: solving a task through code generation, execution, debugging, and human feedback.

6.1 Environment Setup

AutoGen requires Python 3.8 or later and can be installed as follows:

```shell
pip install pyautogen
```

With just a few lines of code, you can quickly implement powerful experiences:

```python
import autogen

config_list = autogen.config_list_from_json("OAI_CONFIG_LIST")
```

The contents of config_list look like this:

```python
config_list = [
    {
        'model': 'gpt-4',
        'api_key': '<your OpenAI API key here>',
    },  # OpenAI API endpoint for gpt-4
    {
        'model': 'gpt-4',
        'api_key': '<your first Azure OpenAI API key here>',
        'api_base': '<your first Azure OpenAI API base here>',
        'api_type': 'azure',
        'api_version': '2023-06-01-preview',
    },  # Azure OpenAI API endpoint for gpt-4
    {
        'model': 'gpt-4',
        'api_key': '<your second Azure OpenAI API key here>',
        'api_base': '<your second Azure OpenAI API base here>',
        'api_type': 'azure',
        'api_version': '2023-06-01-preview',
    },  # another Azure OpenAI API endpoint for gpt-4
    {
        'model': 'gpt-3.5-turbo',
        'api_key': '<your OpenAI API key here>',
    },  # OpenAI API endpoint for gpt-3.5-turbo
    {
        'model': 'gpt-3.5-turbo',
        'api_key': '<your first Azure OpenAI API key here>',
        'api_base': '<your first Azure OpenAI API base here>',
        'api_type': 'azure',
        'api_version': '2023-06-01-preview',
    },  # Azure OpenAI API endpoint for gpt-3.5-turbo
    {
        'model': 'gpt-3.5-turbo',
        'api_key': '<your second Azure OpenAI API key here>',
        'api_base': '<your second Azure OpenAI API base here>',
        'api_type': 'azure',
        'api_version': '2023-06-01-preview',
    },  # another Azure OpenAI API endpoint for gpt-3.5-turbo
]
```
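As far as the AutoGen documentation describes it, `config_list_from_json("OAI_CONFIG_LIST")` reads this list from an environment variable or a JSON file with that name. The sketch below does not call AutoGen at all; it only uses the standard `json` module to show what such a file might contain and how a list like this can be narrowed to one model. The temporary directory and the two shortened entries are assumptions for illustration.

```python
import json
import os
import tempfile

# Two shortened, illustrative entries with placeholder keys.
sample_configs = [
    {"model": "gpt-4", "api_key": "<your OpenAI API key here>"},
    {"model": "gpt-3.5-turbo", "api_key": "<your OpenAI API key here>"},
]

# Write the list as JSON to a file named OAI_CONFIG_LIST in a scratch directory.
config_dir = tempfile.mkdtemp()
config_path = os.path.join(config_dir, "OAI_CONFIG_LIST")
with open(config_path, "w") as f:
    json.dump(sample_configs, f, indent=2)

# Read it back: the file is plain JSON holding a list of dictionaries.
with open(config_path) as f:
    loaded = json.load(f)

# Keep only the entries for one model.
gpt4_only = [c for c in loaded if c["model"] == "gpt-4"]
print([c["model"] for c in gpt4_only])  # ['gpt-4']
```

Keeping keys in a separate file or environment variable, rather than in the script, avoids committing credentials alongside the code.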

6.2 Creation of Assistant Agent and User Agent

```python
# create an AssistantAgent instance named "assistant"
assistant = autogen.AssistantAgent(
    name="assistant",
    llm_config={
        "seed": 41,
        "config_list": config_list,
    }
)

# create a UserProxyAgent instance named "user_proxy"
user_proxy = autogen.UserProxyAgent(
    name="user_proxy",
    human_input_mode="ALWAYS",
    is_termination_msg=lambda x: x.get("content", "").rstrip().endswith("TERMINATE"),
)

# the purpose of the following line is to log the conversation history
autogen.ChatCompletion.start_logging()
```
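The termination check passed as `is_termination_msg` is an ordinary Python predicate, so it can be tried on its own without any API key. The sample messages below are made up for illustration:

```python
def is_termination_msg(x: dict) -> bool:
    # Same expression as the lambda above: true when the message content,
    # ignoring trailing whitespace, ends with the word TERMINATE.
    return x.get("content", "").rstrip().endswith("TERMINATE")


sample_messages = [
    {"content": "Here is the code to run."},
    {"content": "The answer is 7. TERMINATE\n"},
    {"role": "assistant"},                    # no content key at all
    {"content": "TERMINATE is the keyword"},  # keyword not at the end
]
results = [is_termination_msg(m) for m in sample_messages]
print(results)  # [False, True, False, False]
```

Note that the default in `x.get("content", "")` only covers a missing key; a message whose content is explicitly `None` would raise an `AttributeError` with this expression.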

6.3 Executing a Task

Call the initiate_chat() method of the User Agent to start the conversation. When you run the code below, each time a message arrives from the Assistant Agent the system prompts the user for feedback. If the user provides none (just presses Enter), the User Agent tries to execute the code suggested by the Assistant Agent on the user's behalf. The conversation terminates when the Assistant Agent ends a message with the "TERMINATE" signal.

```python
math_problem_to_solve = """
Find $a + b + c$, given that $x+y \\neq -1$ and
\\begin{align}
ax + by + c & = x + 7,\\
a + bx + cy & = 2x + 6y,\\
ay + b + cx & = 4x + y.
\\end{align}.
"""

# the assistant receives a message from the user, which contains the task description
user_proxy.initiate_chat(assistant, message=math_problem_to_solve)
```

The user can provide feedback at each step. The results and error messages of the execution are returned to the assistant, and the assistant can modify the code based on the feedback. Finally, the task is completed and the assistant sends a "TERMINATE" signal. The user eventually skips the feedback and the conversation ends.

After the conversation ends, you can save the conversation log between the two Agents through autogen.ChatCompletion.logged_history.

```python
import json
json.dump(autogen.ChatCompletion.logged_history, open("conversations.json", "w"), indent=2)
```

This example demonstrates how to use AssistantAgent and UserProxyAgent to solve a challenging math problem. The AssistantAgent here is an LLM-based agent that can write Python code to perform a task given by the user. UserProxyAgent is another agent that acts as a proxy for the user and executes the code written by AssistantAgent. With human_input_mode set appropriately, UserProxyAgent can also prompt the user to provide feedback to AssistantAgent. For example, when human_input_mode is set to "ALWAYS", UserProxyAgent always prompts the user for feedback. When the user provides feedback, UserProxyAgent passes it directly to AssistantAgent. When the user provides none, UserProxyAgent executes the code written by AssistantAgent and returns the execution result (success or failure and the corresponding output) to AssistantAgent.
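The decision the proxy makes on each turn in "ALWAYS" mode can be summarized in a few lines. This is a sketch of the behavior described above, not AutoGen's code: `user_proxy_reply`, the `run_code` callback, and the reply format are hypothetical.

```python
from typing import Callable, Tuple


def user_proxy_reply(assistant_message: str,
                     human_feedback: str,
                     run_code: Callable[[str], Tuple[bool, str]]) -> str:
    """Forward human feedback if there is any; otherwise execute and report."""
    if human_feedback.strip():
        # The user typed something: pass it straight to the assistant.
        return human_feedback
    # The user just pressed Enter: run the suggested code on their behalf.
    ok, output = run_code(assistant_message)
    status = "succeeded" if ok else "failed"
    return f"execution {status}\noutput: {output}"


def fake_run(code: str) -> Tuple[bool, str]:
    # Hypothetical executor that pretends every snippet prints 7.
    return True, "7"


with_feedback = user_proxy_reply("print(a + b + c)", "use sympy instead", fake_run)
without_feedback = user_proxy_reply("print(a + b + c)", "", fake_run)
print(with_feedback)
print(without_feedback)
```

Either way the assistant receives a message it can act on: a human instruction in the first case, an execution report in the second.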

7. Summary

An agent is an important form of program that actively interacts with large models, while Multi-Agent is a system mechanism in which multiple agents use large models to complete complex tasks. Microsoft's AutoGen is an open source, community-driven project oriented toward multi-agent conversation, and it is still under active development. AutoGen aims to give developers an effective and easy-to-use framework for building the next generation of applications, and it has already shown promise for building creative applications, leaving broad room for innovation.