1. Introduction to AI Agent (LLM Agent)

1.1. Terminology

- Agent: In philosophy, an agent is an entity capable of acting, and "agency" usually refers to the performance of intentional actions. Agents can be people, animals, or even autonomous concepts or entities.

- AI Agent: An AI Agent (Artificial Intelligence Agent) is an intelligent entity that can perceive the environment, make decisions, and perform actions.

- RPA: RPA (Robotic Process Automation) is a software automation technology. RPA automates business processes by imitating the manual operations a person performs on a computer, such as opening websites, clicking the mouse, and typing on the keyboard. RPA systems can automatically handle large volumes of repetitive, rule-based workflow tasks, such as entering paper documents, verifying bills and receipts, extracting data from emails and files, migrating data across systems, and automating IT application operations in banks. The main advantages of RPA include reduced labor costs, increased productivity, low error rates, monitorable operations, and short development cycles. It can play an important role in many fields such as finance, office automation, and IT process automation.

- Copilot: Literally the "co-pilot" of an airplane. Here, Copilot refers to an assistant built on an underlying large language model (LLM): users only need to give a few words of instruction, and it can create text and other content similar to human writing.

- LangChain: LangChain is a framework designed to help developers build end-to-end applications with language models. It provides a set of tools, components, and interfaces that simplify creating applications powered by large language models (LLMs) and chat models. As a language model integration framework, its use cases largely overlap with those of language models themselves, including document analysis and summarization, chatbots, and code analysis.

- LLM: A Large Language Model (LLM) is an artificial intelligence (AI) algorithm that uses deep learning techniques and massive datasets to understand, summarize, generate, and predict new content.

- Sensory memory: Sensory (perceptual) memory is the first stage of information processing and involves briefly storing information received through the senses. It usually lasts only a few hundred milliseconds to a few seconds. For example, after you look at a beautiful landscape photo and close your eyes, you can still briefly "see" its colors and shapes in your mind. This is sensory memory at work.

- Short-term memory: Short-term memory is like a mental workbench that can temporarily store and process small amounts of information. For example, when you try to remember a phone number, you might repeat it until you dial it. For an LLM, all in-context learning relies on the model's short-term memory, that is, the information in its context window.

- Long-term memory: Long-term memory is like a large warehouse that stores our experience, knowledge, and skills, sometimes for a lifetime. For example, once you learn to ride a bicycle, you still remember how to ride it even after years without riding. In agents, long-term memory is generally implemented with an external vector store and fast retrieval.

- Memory Stream: The memory stream stores the agent's past observations, thoughts, and action sequences. Just as the human brain relies on its memory system to draw on previous experiences when forming strategies and making decisions, agents need specific memory mechanisms to handle a series of continuous tasks proficiently.

- MRKL (Modular Reasoning, Knowledge and Language): MRKL is a neuro-symbolic architecture for autonomous agents that treats reasoning, knowledge, and language capabilities as separate modules. Like building blocks, each module represents one capability of the AI, and combined together they let the AI perform complex thinking and communication.

- TALM (Tool Augmented Language Models): A tool-augmented language model is a language model enhanced with external tools, usually through fine-tuning. For example, an AI chatbot can answer questions or provide information more accurately by accessing a search engine or other databases.

- Subgoal and decomposition: When solving problems, agents often break down a big goal into several small goals (sub-goals) to achieve efficient processing of complex tasks. For example, to prepare a dinner, you may need to go shopping first (sub-goal 1), then prepare the ingredients (sub-goal 2), and finally cook (sub-goal 3).

- Reflection and refinement: Agents can criticize and reflect on their past actions, learn from mistakes, and improve future steps, thereby raising the quality of the final result. It is like reviewing an article after writing it and fixing grammatical errors or unclear expressions to make it better.

- Chain-of-thought (CoT): CoT has become a standard prompting technique for improving model performance on complex tasks. The model is asked to "think step by step", breaking a difficult task into smaller and simpler steps. CoT turns a large task into multiple manageable ones and helps people understand the model's thinking process. For example, to explain why the sky is blue, you might think: "Light is made up of different colors... Blue light has a short wavelength and is easily scattered by the atmosphere... So the sky looks blue."

- Tree of Thoughts (ToT): ToT extends CoT by exploring multiple reasoning possibilities at each step of a task. It first breaks the problem down into multiple thinking steps and generates multiple ideas at each step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search). A tree of thoughts is like a big tree in which each branch represents a direction of thought or an idea, which helps organize and visualize complex thinking processes.

- Self Reflection: Self-reflection is deep thinking about and analysis of your own actions, thoughts, or emotions. For example, at the end of the day, you might think back on what you did and evaluate what went well and what needs improvement.

- ReAct: ReAct combines the separate action and language spaces of a task so that the large model's reasoning and acting are integrated. This mode helps the large model interact with the environment (for example, using the Wikipedia search API) and leave traces of its reasoning in natural language. Each step mainly consists of Thought, Action, and Observation.

- Reflexion: A framework that gives AI agents dynamic memory and self-reflection to improve their reasoning ability. It follows the ReAct setup and provides simple binary rewards. After each action, the agent computes a heuristic and, depending on the result of self-reflection, decides whether to reset the environment and start a new trial. The heuristic detects whether the current trajectory is inefficient (taking too long without success) or contains hallucinations (a sequence of consecutive identical actions that lead to the same observation in the environment), and stops the trial in either case.

- Self-ask: A prompting method in which the model explicitly asks itself follow-up questions, and answers them, before answering the original question. It resembles a person facing a problem and asking themselves "What should I do next?" to move the solution forward.

- Chain of Hindsight: By explicitly showing the model a series of its past outputs, each annotated with feedback, the model is encouraged to improve its own outputs so that the next predicted action achieves better results than the previous trial. Algorithm Distillation applies the same idea to cross-episode trajectories in reinforcement learning tasks.

1.2. The meaning of the word "agent" and what an agent is

1.2.1 Origin of Agent

Many people may wonder: agents do not seem that far removed from LLMs, so why have agents become so popular recently, and why are they not simply called "LLM applications" or something similar? The answer lies in the origin of the term. "Agent" is a very old term that can be traced back to the writings of Aristotle and Hume. In a philosophical sense, an "agent" is an entity with the ability to act, and "agency" refers to the exercise or manifestation of this ability. In a narrow sense, "agency" usually refers to the performance of intentional actions; accordingly, an "agent" is an entity with desires, beliefs, intentions, and the ability to act. Note that agents include not only individual humans but also other entities in the physical and virtual worlds. Importantly, the concept of "agent" involves individual autonomy: the ability to exercise one's will, make choices, and take actions, rather than passively respond to external stimuli.

It may be surprising that before the mid-to-late 1980s, researchers in mainstream artificial intelligence paid relatively little attention to the concept of agents. Since then, however, interest in the topic has grown greatly in the computer science and AI communities. As Wooldridge et al. put it, artificial intelligence can be defined as "a subfield of computer science that seeks to design and build computer-based agents that exhibit various aspects of intelligent behavior." In this sense, the agent is a core concept of artificial intelligence. When the concept was introduced into AI, its meaning shifted somewhat. In philosophy, an agent can be a person, an animal, or even an autonomous concept or entity; in AI, an agent is a computational entity. Because concepts such as consciousness and desire seem metaphysical when applied to computational entities, and we can only observe the behavior of machines, many AI researchers, including Alan Turing, suggested setting aside the question of whether agents "really" think or have "thoughts". Instead, researchers describe agents through other properties, such as autonomy, reactivity, proactiveness, and social ability. Some researchers also hold that intelligence is "in the eye of the beholder" rather than an innate, isolated attribute. In essence, an AI agent is not the same as a philosophical agent; rather, it is the concretization of the philosophical concept of agency in the field of artificial intelligence.

There is no unified name for AI agents yet; terms such as "AI Agent", "Intelligent Agent", and "intelligent entity" are all in use. The rest of this article explains what an AI agent is, along with its technical principles and application scenarios.

1.2.2 What is AI Agent

An AI Agent (Artificial Intelligence Agent) is an intelligent entity that can perceive its environment, make decisions, and perform actions. Unlike traditional AI, an AI agent can work toward a given goal step by step by thinking independently and calling tools. For example, if you ask an AI agent to order takeout, it can call a food-delivery app to choose the meal and then call a payment program to place the order and pay, without a human specifying each step. In computer science, the concept of the agent is commonly traced to Minsky's 1986 book "The Society of Mind". Minsky held that certain individuals in a society can find solutions to problems through negotiation, and these individuals are agents. He also believed that agents should have social interactivity and intelligence. The concept of agents was thus introduced into AI and computing and quickly became a research hotspot. However, because of limits on data and computing power, the practical conditions for building truly intelligent AI agents were lacking.

The difference between a large language model and an AI agent is that the agent can think and act independently; the difference between an AI agent and RPA is that the agent can handle unknown environmental information. After the release of ChatGPT, AI became able to hold genuine multi-turn conversations with humans and give specific answers and suggestions for the questions asked. Subsequently, "Copilots" were launched in various fields, such as Microsoft 365 Copilot, Microsoft Security Copilot, GitHub Copilot, and Adobe Firefly, making AI an "intelligent co-pilot" in office work, coding, design, and other scenarios.

The differences between AI agents and large models are:

- Large models interact with humans through prompts, so whether a user's prompt is clear and unambiguous affects the quality of the model's answers. For example, ChatGPT and the various Copilots require clearly specified tasks to give useful answers.

- An AI agent only needs to be given a goal; it can then think independently and act toward that goal. It breaks the task down into a detailed step-by-step plan, relies on feedback from the outside world and its own reasoning, and writes prompts for itself to achieve the goal. If Copilot is the "co-pilot", then the agent can be regarded as the main "pilot".

Traditional RPA can only handle work under given conditions, following the processes preset in the program. When faced with large amounts of unknown information and an unpredictable environment, RPA cannot work. An AI agent, by contrast, can perceive information by interacting with the environment and think and act accordingly.

The AI agents we see today often use a question-answering chatbot as the entry point, triggering fully automatic workflows through natural language without human intervention. Humans only issue the instructions and do not take part in giving feedback on the AI's results.


1.2.3 Why do we need AI Agents?

Some limitations of LLMs:

- Hallucinations

- Outputs that are not always factually accurate

- Limited or no knowledge of current events

- Difficulty with complex calculations

- No ability to take actions

- No long-term memory

For example, if you ask ChatGPT to buy you a cup of coffee, it will typically reply that it cannot make purchases because it is only a text-based AI assistant. But if you ask an AI agent built on ChatGPT to buy a cup of coffee, it will first work out how to buy the coffee and plan several steps, such as ordering and paying through an app, and then carry out those steps by calling the app to place the order and calling the payment program to pay, without humans having to specify each step. This is where AI agents come in: they can use external tools to overcome these limitations. What are tools here? A tool is a plug-in, an integrated API, a code library, or similar that an agent uses to complete a specific task, for example:

- Google Search: get the latest information

- Python REPL: execute code

- Wolfram: perform complex computations

- External APIs: get specific information

LangChain provides a general framework for easily calling these tools through the instructions of a large language model. Performing a complex task requires considering many factors and splitting the task into small subtasks, and AI agents were created to handle exactly such complex tasks. In terms of how they process complex tasks, AI agents fall mainly into two categories: action agents and plan-and-execute agents. In short, an AI agent is a computational entity that combines a large model to automatically think, plan, verify, and execute in order to achieve specific task goals. If the large model is compared to the brain, the AI agent can be understood as the cerebellum plus the hands and feet.
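The plan-and-execute style can be sketched in plain Python. This is a minimal, hypothetical illustration rather than LangChain's API: the tools `lookup_price` and `add_numbers` are toy stand-ins for real tools such as search or a Python REPL, and `plan` is hard-coded where a real agent would ask the LLM to produce the step list.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools; a real agent would wrap web search, a Python REPL, Wolfram, or external APIs.
def lookup_price(item: str) -> str:
    """Toy 'external API' returning prices from a fixed table."""
    prices = {"coffee": "4", "bagel": "3"}
    return prices.get(item, "unknown")

def add_numbers(arg: str) -> str:
    """Add comma-separated integers, e.g. "4,3" -> "7"."""
    return str(sum(int(x) for x in arg.split(",")))

TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_price": lookup_price,
    "add_numbers": add_numbers,
}

Step = Tuple[str, str]  # (tool name, argument template)

def plan(goal: str) -> List[Step]:
    """Stand-in for the LLM planner. A real agent would prompt the LLM to write this plan."""
    if goal == "total price of coffee and bagel":
        return [("lookup_price", "coffee"), ("lookup_price", "bagel"), ("add_numbers", "{0},{1}")]
    return []

def execute(steps: List[Step]) -> List[str]:
    """Run the planned steps in order; {0}, {1}, ... in a template refer to earlier results."""
    results: List[str] = []
    for tool_name, arg_template in steps:
        arg = arg_template.format(*results)
        results.append(TOOLS[tool_name](arg))
    return results

results = execute(plan("total price of coffee and bagel"))
print(results[-1])  # 7
```

Separating planning from execution makes the whole plan visible up front; an action agent would instead decide on one tool call at a time after seeing each result.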

1.2.4 Differences between AI Agents and Other Modes of Human-AI Collaboration

AI agents are more independent than the Copilot mode that is widely used today. Human-AI interaction has evolved from embedded, tool-like AI (such as Siri) to assistant-like AI. Today's AI Copilots no longer mechanically carry out human instructions; they participate in human workflows, offer suggestions on tasks such as writing code, planning activities, and optimizing processes, and complete them in collaboration with humans. An AI agent, by contrast, only needs to be given a goal; it can then think independently and act toward it, breaking the task down into detailed steps, relying on external feedback and its own reasoning, and writing prompts for itself to achieve the goal. If Copilot is the "co-pilot", then the agent can be regarded as the main "pilot".

1.3 AI Agent Cases

1.3.1. AI Virtual Town

As Valentine's Day approaches, Isabella, a coffee shop owner in a town called "Smallville", decides to hold a Valentine's Day party. She invites her best friend Maria to help decorate. When Maria learns about the party, she secretly invites her crush Klaus to come with her... Meanwhile, in the same town, Tom, who is nearly 60 years old, is keenly interested in the town's upcoming mayoral election. As a married, politically minded middle-aged man, he declines Isabella's invitation to the party. None of this happened in the real world, yet it was not written by humans either. It comes from a virtual town populated by 25 AI characters, where every event emerges from interactions between the AIs. At present, the town has been running in an orderly manner for two days.

1.3.2. AutoGPT conducts market research

Suppose you run a shoe company and instruct AutoGPT to conduct market research on waterproof shoes, identify the top 5 companies, and report on the strengths and weaknesses of these competitors:

- First, AutoGPT goes straight to Google and searches for comprehensive reviews of the top 5 waterproof shoe companies. Once it finds relevant links, AutoGPT asks itself questions such as "What are the pros and cons of each pair of shoes?", "What are the pros and cons of each of the top 5 waterproof shoes?", and "What are the top 5 waterproof shoes for men?"

- AutoGPT then continues analyzing other websites and running Google searches, refining its queries until it is satisfied with the results. Along the way, it judges which reviews may be fake and therefore need the reviewer to be verified.

- During execution, AutoGPT even spawned its own sub-agents to analyze websites and find solutions, all on its own. The result was a very detailed report on the top 5 waterproof shoe companies, covering each company's pros and cons, with a concise conclusion. The whole process took only 8 minutes and cost 10 cents, without any optimization.

AutoGPT official public demo: https://www.bilibili.com/video/BV1HP411B7cG/?vd_source=6a57ee58a99488dc38bda2374baa1c10

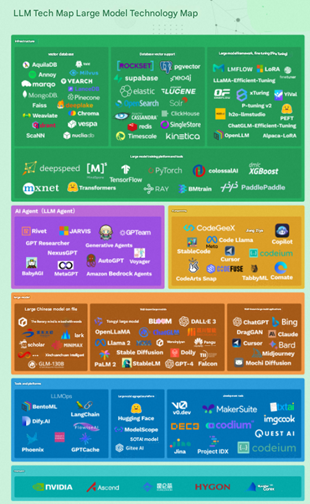

2. AI Agent Framework

The sections above introduced what an AI agent is and demonstrated some cases. The following content analyzes the technology behind AI agents. An LLM-based AI agent system can be divided into four components: the large model, planning, memory, and tool use. In June 2023, Lilian Weng, director of applied research at OpenAI, wrote a blog post arguing that AI agents may mark the beginning of a new era. She proposed the basic architecture Agent = LLM + planning skills + memory + tool use, in which the LLM acts as the agent's "brain", providing reasoning, planning, and other capabilities.

2.1. Large Model + Planning: the agent's "brain", which decomposes tasks through chain-of-thought reasoning

LLMs have logical reasoning ability, and agents can elicit it. Once a model is large enough, the LLM itself has reasoning ability. LLMs already perform well on simple reasoning problems but still sometimes make mistakes on complex ones. In fact, users often fail to get ideal answers because their prompts are not good enough to elicit the LLM's own reasoning ability. Adding prompts that assist reasoning can greatly improve an LLM's reasoning performance. In the paper "Large language models are zero-shot reasoners", adding "Let's think step by step" to the question raised reasoning accuracy on the math reasoning benchmark GSM8K from 10.4% to 40.7%. As an intelligent agent, an AI agent can create appropriate prompts for a given goal and thus better elicit the reasoning ability of large models.
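The zero-shot trick from that paper needs no worked examples, only an appended trigger sentence. Below is a minimal sketch of the two-stage prompting it describes (first elicit the reasoning, then extract the answer). The function names are hypothetical, the exact wording of the second-stage prompt is an approximation, and no model is called.

```python
def reasoning_prompt(question: str) -> str:
    """Stage 1: append the zero-shot CoT trigger so the model writes out its reasoning."""
    return f"Q: {question}\nA: Let's think step by step."

def answer_prompt(question: str, reasoning: str) -> str:
    """Stage 2: feed the model's own reasoning back and ask only for the final answer."""
    return f"{reasoning_prompt(question)} {reasoning}\nTherefore, the answer (arabic numerals) is"

question = ("The cafeteria has 23 apples. If they used 20 to make lunch "
            "and bought 6 more, how many apples do they have?")
print(reasoning_prompt(question))
# A model call (not shown) would complete the stage-1 prompt; its reasoning text then goes into stage 2:
print(answer_prompt(question, "They had 23 - 20 = 3 apples, then 3 + 6 = 9."))
```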

A complex task typically involves many steps, which the AI agent must first break down and plan ahead. Task decomposition can be done in three ways: 1) prompting the LLM with simple instructions such as "Steps for XYZ" or "What are the subgoals for achieving XYZ?"; 2) using task-specific instructions, such as asking the LLM to "write a story outline" when writing a novel; 3) providing the information manually (human input). The most common techniques today are Chain of Thought and Tree of Thoughts:

Chain of Thought:

Chain of Thought (CoT) has become a standard prompting technique for improving model performance on complex tasks. The model is asked to "think step by step", breaking a difficult task into smaller, simpler steps. CoT turns a large task into multiple manageable ones and helps people understand the model's thought process.

Take a math word problem as an example. With standard prompting, the model input is:


Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has 3 tennis balls. How many tennis balls does he have now?

A: The answer is 11.

Q: The cafeteria has 23 apples. If they used 20 to make lunch and bought 6 more, how many apples do they have?

Model output:

A: The answer is 27.

The model fails to give the correct answer. Now suppose we show the model how to work through the problem, just as in a math exam we write down the solution process before the final answer (otherwise we get no points). This is what CoT does. With CoT prompting, the model input is:


Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has 3 tennis balls. How many tennis balls does he have now?

A: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. 5 + 6 = 11. The answer is 11.

Q: The cafeteria has 23 apples. If they used 20 to make lunch and bought 6 more, how many apples do they have?

Model output:

A: The cafeteria had 23 apples originally. They used 20 to make lunch. So they had 23 - 20 = 3. They bought 6 more apples, so they have 3 + 6 = 9. The answer is 9.

As you can see, for a similar arithmetic problem, CoT prompting leads the model to produce the reasoning steps before giving the answer. CoT prompting decomposes a multi-step reasoning problem into many intermediate steps, allocating more computation (generating more tokens) to the problem, and then combines the intermediate results to reach the solution.
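In code, CoT prompting is mostly string assembly plus parsing. The sketch below (hypothetical helper names, no model call) builds the few-shot prompt from the worked Roger exemplar and pulls the final number out of a completion; it assumes the model follows the exemplar's "The answer is N." format.

```python
import re
from typing import List, Optional, Tuple

# Worked exemplar from the CoT example: (question, reasoning, answer).
EXEMPLARS: List[Tuple[str, str, str]] = [
    ("Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
     "Each can has 3 tennis balls. How many tennis balls does he have now?",
     "Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. 5 + 6 = 11.",
     "11"),
]

def build_cot_prompt(question: str) -> str:
    """Few-shot CoT: each exemplar answer shows its reasoning before 'The answer is'."""
    shots = [f"Q: {q}\nA: {r} The answer is {a}." for q, r, a in EXEMPLARS]
    return "\n\n".join(shots + [f"Q: {question}\nA:"])

def extract_answer(completion: str) -> Optional[str]:
    """Read the final number from a completion ending in 'The answer is N.'"""
    match = re.search(r"The answer is\s+(-?\d+)", completion)
    return match.group(1) if match else None

completion = ("The cafeteria had 23 apples originally. They used 20 to make lunch. So they had "
              "23 - 20 = 3. They bought 6 more apples, so they have 3 + 6 = 9. The answer is 9.")
print(extract_answer(completion))  # 9
```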

Tree of Thoughts

Tree of Thoughts extends chain of thought by exploring multiple reasoning possibilities at each step of the task. It first breaks the problem down into multiple thinking steps and generates multiple ideas at each step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search). ToT has four components: thought decomposition, a thought generator, a state evaluator, and a search algorithm.

An example of a ToT prompt is as follows:


Suppose three different experts answer this question. All experts write down the first step they take to think about this question and share it with everyone. Then, all experts write down the next step they take to think about this question and share it with everyone. And so on, until all experts have written down all the steps they think about. As soon as everyone finds that an expert's steps are wrong, let this expert leave. Please ask...
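The four ToT components map naturally onto code. Below is a minimal sketch of the BFS variant on a toy puzzle: reach a target number from a start value using the moves +1, *2, and +3. The puzzle, the rule-based `generate_thoughts`, and `evaluate` are hypothetical stand-ins for what ToT would obtain by prompting an LLM to propose and score thoughts; thought decomposition here is simply "one move per step", and the search keeps only the best few states at each level.

```python
from typing import List, Optional, Tuple

State = Tuple[int, Tuple[str, ...]]  # (current value, moves taken so far)

def generate_thoughts(state: State) -> List[State]:
    """Thought generator: propose several candidate next steps from one state."""
    value, moves = state
    options = {"+1": value + 1, "*2": value * 2, "+3": value + 3}
    return [(new_value, moves + (name,)) for name, new_value in options.items()]

def evaluate(state: State, target: int) -> int:
    """State evaluator: higher is more promising (negative distance to the target)."""
    return -abs(target - state[0])

def tot_bfs(start: int, target: int, beam_width: int = 2,
            max_depth: int = 5) -> Optional[Tuple[str, ...]]:
    """Breadth-first ToT search that keeps only the best `beam_width` states per level."""
    frontier: List[State] = [(start, ())]
    for _ in range(max_depth):
        candidates = [child for state in frontier for child in generate_thoughts(state)]
        for value, moves in candidates:
            if value == target:
                return moves
        candidates.sort(key=lambda s: evaluate(s, target), reverse=True)
        frontier = candidates[:beam_width]
    return None

print(tot_bfs(1, 10))  # ('+3', '+3', '+3')
```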

On the other hand, trial and error and error correction are inevitable and crucial in real-world decision-making. Self-reflection lets AI agents refine past decisions and correct earlier mistakes, so they improve continuously. Representative techniques include ReAct, Reflexion, and Chain of Hindsight.

ReAct

ReAct: Combines the separate action and language spaces of a task, integrating the reasoning and acting of a large model. This mode helps large models interact with the environment (for example, using the Wikipedia search API) and leave traces of their reasoning in natural language.

ReAct paper "ReAct: Synergizing Reasoning and Acting in Language Models": https://react-lm.github.io/
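A ReAct agent alternates Thought, Action, and Observation in one growing transcript. The sketch below shows that loop with a scripted stand-in for the LLM and a dictionary standing in for the Wikipedia search API; both are hypothetical, and the `Search[...]`/`Finish[...]` action format is modeled on the paper's examples rather than copied from its code.

```python
from typing import Callable, Dict, Tuple

# Toy stand-in for the Wikipedia search API: a hypothetical lookup table.
FAKE_WIKI = {"Eiffel Tower": "The Eiffel Tower is a wrought-iron tower in Paris, France."}

def search(entity: str) -> str:
    return FAKE_WIKI.get(entity, "No results.")

TOOLS: Dict[str, Callable[[str], str]] = {"Search": search}

def scripted_llm(transcript: str) -> str:
    """Hypothetical stand-in for the LLM: returns the next Thought and Action."""
    if "Observation:" not in transcript:
        return "Thought: I should look up the Eiffel Tower.\nAction: Search[Eiffel Tower]"
    return "Thought: The observation says it is in Paris.\nAction: Finish[Paris]"

def parse_action(step: str) -> Tuple[str, str]:
    """Split 'Action: Name[argument]' into ('Name', 'argument')."""
    action = step.split("Action: ", 1)[1].strip()
    name, argument = action.split("[", 1)
    return name, argument[:-1]  # drop the closing ']'

def react(question: str, llm: Callable[[str], str] = scripted_llm, max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)               # reason: Thought + Action
        transcript += step + "\n"
        name, argument = parse_action(step)
        if name == "Finish":
            return argument
        observation = TOOLS[name](argument)  # act, then record what the environment returned
        transcript += f"Observation: {observation}\n"
    return "No answer within the step limit."

print(react("Which city is the Eiffel Tower in?"))  # Paris
```

The `max_steps` cap matters in practice: without it, an agent that keeps issuing unhelpful actions would loop forever.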

Reflexion

Reflexion: A framework that gives AI agents dynamic memory and self-reflection to improve their reasoning ability. It follows the ReAct setup and provides simple binary rewards. After each action, the agent computes a heuristic and, depending on the result of self-reflection, decides whether to reset the environment and start a new trial. The heuristic detects whether the current trajectory is inefficient (taking too long without success) or contains hallucinations (a sequence of consecutive identical actions that lead to the same observation in the environment), and stops the trial in either case.
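The stopping heuristic can be expressed directly. In this minimal sketch, a trajectory is a list of (action, observation) pairs; the thresholds `max_steps` and `max_repeats` are hypothetical values, and Reflexion's other half, in which the LLM writes a verbal self-reflection into memory before the next trial, is omitted.

```python
from typing import List, Tuple

Step = Tuple[str, str]  # (action, observation)

def is_inefficient(trajectory: List[Step], max_steps: int) -> bool:
    """Taking too long without success: more steps than the budget allows."""
    return len(trajectory) > max_steps

def is_hallucinating(trajectory: List[Step], max_repeats: int) -> bool:
    """True if the same action yields the same observation max_repeats times in a row."""
    run = 1
    for previous, current in zip(trajectory, trajectory[1:]):
        run = run + 1 if current == previous else 1
        if run >= max_repeats:
            return True
    return False

def should_reset(trajectory: List[Step], max_steps: int = 10, max_repeats: int = 3) -> bool:
    """Heuristic: stop this trial (and reflect) if either failure mode is detected."""
    return is_inefficient(trajectory, max_steps) or is_hallucinating(trajectory, max_repeats)

looping = [("search[x]", "no results")] * 3
print(should_reset(looping))  # True
```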

2.2. Memory: More memory with limited context length

The memory module stores information, including past interactions, learned knowledge, and even temporary task information. For an agent, an effective memory mechanism ensures that it can draw on past experience and knowledge when facing new or complex situations. For example, a chatbot with memory can remember user preferences or earlier conversation content, providing a more personalized and coherent experience.

The inputs to an AI agent system become the system's memory, and they can be mapped onto the types of human memory. Memory can be defined as the process of acquiring, storing, retaining, and later retrieving information. The human brain has several types of memory, such as sensory memory, short-term memory, and long-term memory. For an AI agent, the content the user generates while interacting with it can be considered the agent's memory, and it maps onto these human memory types: sensory memory corresponds to the raw inputs, including text, images, or other modalities, from which embedding representations are learned; short-term memory corresponds to the context, which is constrained by the finite context window; long-term memory corresponds to an external vector database that the agent queries while working and accesses through fast retrieval. Today, agents mainly use external long-term memory to complete complex tasks such as reading PDFs and searching for real-time news online. Tasks and results are stored in the memory module, and when the information is needed, it is returned into the conversation with the user, creating a richer context.
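The split between a limited context and an external store can be sketched as a small class. This is a hypothetical illustration: `AgentMemory`, its window size, and the keyword-based `recall` are assumptions made for brevity; a real agent would store embeddings in a vector database and retrieve by similarity.

```python
from collections import deque
from typing import Deque, List

class AgentMemory:
    """Toy agent memory: a bounded short-term buffer plus an unbounded long-term store."""

    def __init__(self, context_size: int = 3) -> None:
        self.short_term: Deque[str] = deque(maxlen=context_size)  # plays the context window
        self.long_term: List[str] = []                             # plays the external store

    def add(self, item: str) -> None:
        # When the window is full, archive the oldest item before the deque drops it.
        if len(self.short_term) == self.short_term.maxlen:
            self.long_term.append(self.short_term[0])
        self.short_term.append(item)

    def recall(self, keyword: str) -> List[str]:
        """Naive keyword retrieval; real agents would use embedding similarity instead."""
        return [m for m in self.long_term if keyword.lower() in m.lower()]

    def context(self) -> str:
        """What would be placed into the prompt as short-term memory."""
        return "\n".join(self.short_term)

memory = AgentMemory(context_size=2)
for message in ["User prefers green tea", "User: hello", "User: recommend a drink"]:
    memory.add(message)
print(memory.context())      # the two most recent messages
print(memory.recall("tea"))  # ['User prefers green tea']
```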

To overcome the limited context, external memory is usually used. A common practice is to save the embedding representations of information in a vector store database that supports fast maximum inner product search (MIPS). By converting data into vectors, vector databases solve the problems of storing, retrieving, and matching massive amounts of knowledge for large models. Vectors are a common form in which AI represents the world. Large models require large amounts of training data to acquire rich semantic and contextual information, which has led to exponential growth in data volume. Vector databases use embedding methods to convert unstructured data such as images, audio, and video into multi-dimensional vectors that can be managed in a structured way, enabling fast and efficient storage and retrieval and giving agents "long-term memory". At the same time, mapping high-dimensional multimodal data to lower-dimensional vectors can greatly reduce storage and computation costs. The storage cost of vector databases is 2 to 4 orders of magnitude lower than that of storing knowledge in neural networks.
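Retrieval from such a store boils down to maximum inner product search. The brute-force sketch below (NumPy, with toy 2-D vectors invented for illustration) shows what MIPS computes; production vector databases answer the same query approximately, using indexes such as HNSW, to stay fast over millions of vectors.

```python
import numpy as np

def mips_top_k(query: np.ndarray, keys: np.ndarray, k: int) -> np.ndarray:
    """Exact maximum inner product search: indices of the k stored vectors with largest query.key."""
    scores = keys @ query                        # one inner product per stored vector
    top = np.argpartition(-scores, k - 1)[:k]    # the k best, in no particular order
    return top[np.argsort(-scores[top])]         # order those k from best to worst

# Hypothetical 2-D "embeddings" of four stored memories.
keys = np.array([[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]])
query = np.array([1.0, 0.0])
print(mips_top_k(query, keys, k=2))  # [0 2]
```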

Embedding technology and vector similarity computation are the core of vector databases. Embedding is a method of converting unstructured data such as images, audio, and video into a form computers can work with. For example, a map is an embedding of real geography: real terrain carries far more than three dimensions of information, yet a map uses colors and contour lines to represent as much of it as possible. After unstructured data such as text is converted into vectors, mathematical methods can compute the similarity between two vectors, enabling text comparison. The powerful search capability of vector databases rests on this similarity computation: similarity search finds approximate matches for similar queries. It is a fuzzy search with no single exact answer, which lets it support a wider range of application scenarios efficiently.
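The similarity step can be made concrete with cosine similarity, one common choice (inner product and Euclidean distance are others). To keep the sketch self-contained, word counts stand in for a learned embedding model; that substitution is an assumption, and real embeddings are dense vectors that also capture meaning beyond exact word overlap.

```python
import math
from collections import Counter
from typing import Mapping

def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. Real systems use a learned embedding model."""
    return Counter(text.lower().split())

def cosine_similarity(a: Mapping[str, int], b: Mapping[str, int]) -> float:
    """cos(a, b) = a.b / (|a| |b|); 1.0 means same direction, 0.0 means no overlap."""
    dot = sum(count * b.get(word, 0) for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

query = embed("why is the sky blue")
print(round(cosine_similarity(query, embed("the sky is blue")), 3))  # 0.894
print(cosine_similarity(query, embed("how to cook rice")))           # 0.0
```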

2.3. Tools: Knowing how to use tools makes you more human

A major difference between AI agents and large models is the ability to use external tools to extend the model's capabilities. Using tools is one of the most remarkable and distinctive traits of humans. Likewise, large models can be equipped with external tools so that they can complete tasks they otherwise could not. A major drawback of ChatGPT was that its training data ended in 2021, so it could not directly answer questions about newer knowledge. Although OpenAI later added plug-ins to ChatGPT, including a browser plug-in for accessing up-to-date information, users had to specify whether a question needed a plug-in, so the model could not answer in a completely natural way. AI agents can call tools autonomously: for each subtask, the agent decides whether an external tool is needed to complete it, and after the tool finishes, it passes the returned information to the LLM to proceed with the next subtask. In June 2023, OpenAI also added function calling to GPT-4 and GPT-3.5. Developers can describe functions to these two models and have the model intelligently choose to output a JSON object containing the arguments for calling those functions. This gives a more reliable way to connect GPT's capabilities with external tools and APIs and to obtain structured data from the model, which makes things easier for AI developers. This kind of tool calling is implemented by fine-tuning the model on large datasets of tool-call examples.
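On the application side, function calling reduces to describing tools and dispatching the JSON the model returns. The sketch below makes no API call: `get_weather` and `dispatch` are hypothetical, and the `name`/`arguments` shape (with arguments encoded as a JSON string) is modeled on OpenAI's function-calling responses as an assumption; check the current API reference before relying on exact field names.

```python
import json
from typing import Any, Callable, Dict

def get_weather(city: str, unit: str = "celsius") -> Dict[str, Any]:
    """Hypothetical local tool; a real one would call a weather service."""
    return {"city": city, "temperature": 21, "unit": unit}

# Tool description in JSON Schema style, the kind of spec a developer sends along with the prompt.
TOOL_SPECS = [{
    "name": "get_weather",
    "description": "Get the current weather for a city",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["city"],
    },
}]

REGISTRY: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}

def dispatch(function_call: Dict[str, str]) -> str:
    """Run the tool the model chose and return JSON to hand back to the LLM."""
    arguments = json.loads(function_call["arguments"])  # arguments arrive as a JSON string
    result = REGISTRY[function_call["name"]](**arguments)
    return json.dumps(result)

# Pretend the model, after reading TOOL_SPECS, replied with this call:
model_call = {"name": "get_weather", "arguments": json.dumps({"city": "Paris"})}
print(dispatch(model_call))  # {"city": "Paris", "temperature": 21, "unit": "celsius"}
```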


To sum up, the working principle of an AI Agent rests on four capabilities: perception, analysis, decision-making, and execution. Perception gathers information about the external environment through sensors so that the AI Agent can understand its surroundings. Analysis processes the perceived information to extract useful features and patterns. Decision-making uses the analysis results to decide what to do and to formulate a corresponding action plan. Execution turns those decisions into concrete actions that complete the task. Working together, these four capabilities let an AI Agent operate and carry out tasks efficiently in complex environments.
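The four capabilities can be illustrated as a perceive, analyze, decide, act loop. In this toy sketch a simulated thermostat stands in for the environment; the functions, the temperature scenario, and the 0.5 degree tolerance are all hypothetical. In an LLM-based agent the analysis and decision steps would typically be delegated to the model.

```python
from dataclasses import dataclass

@dataclass
class Observation:
    temperature_c: float

def perceive(environment: dict) -> Observation:
    # Perception: read a (simulated) sensor.
    return Observation(temperature_c=environment["temperature_c"])

def analyze(obs: Observation, target_c: float) -> float:
    # Analysis: extract the feature that matters, the deviation from the target.
    return obs.temperature_c - target_c

def decide(deviation: float, tolerance: float = 0.5) -> str:
    # Decision: turn the analysis result into an action.
    if deviation > tolerance:
        return "cool"
    if deviation < -tolerance:
        return "heat"
    return "idle"

def execute(action: str, environment: dict) -> None:
    # Execution: act on the environment (here, shift the temperature by 1 degree).
    delta = {"cool": -1.0, "heat": 1.0, "idle": 0.0}[action]
    environment["temperature_c"] += delta

def run_agent_loop(environment: dict, target_c: float, steps: int) -> list[str]:
    actions = []
    for _ in range(steps):
        action = decide(analyze(perceive(environment), target_c))
        execute(action, environment)
        actions.append(action)
    return actions

env = {"temperature_c": 24.0}
print(run_agent_loop(env, target_c=22.0, steps=4))
```

Keeping each capability in its own function mirrors the four-stage description and makes it easy to swap one stage (for example, decision-making) for an LLM call without touching the others.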

3. Application progress of AI Agent

3.1. AutoGPT: Driving the Research Boom of AI Agents

AutoGPT brought the concept of the AI Agent to a mainstream audience. In March 2023, the developer Significant Gravitas released the open-source project AutoGPT on GitHub. Driven by GPT-4, it lets the AI act autonomously without requiring a user prompt for each operation: give AutoGPT a goal, and it decomposes the goal into tasks, performs the operations, and completes the tasks on its own. AutoGPT still suffers from high cost, slow responses, and infinite-loop bugs. It uses the GPT-3.5 and GPT-4 APIs, and GPT-4 costs 15 times as much per token as GPT-3.5. Suppose each task takes 20 steps (under ideal conditions), each step consumes 4K GPT-4 tokens, and prompts and replies together average $0.05 per thousand tokens (because in actual use, replies consume far more tokens than prompts). At an exchange rate of 1 USD = 7 RMB, one task costs 20 × 4 × 0.05 × 7 = 28 RMB. And that is only the ideal case. In normal use, tasks often have to be split into dozens or even hundreds of steps, at which point the cost of a single task becomes unacceptable. GPT-4 also responds much more slowly than GPT-3.5, so tasks with many steps are processed very slowly. Finally, when AutoGPT hits a step that GPT-4 cannot solve, it falls into an endless loop, repeating meaningless prompts and outputs and wasting substantial resources.
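The cost arithmetic above can be written as a small helper, which makes it easy to see how cost scales with the number of steps. The function name and parameters are hypothetical; the defaults simply restate the assumptions in the text (4K tokens per step, a blended $0.05 per thousand tokens, 1 USD = 7 RMB).

```python
def estimate_task_cost_rmb(steps: int, tokens_per_step: int = 4000,
                           usd_per_1k_tokens: float = 0.05,
                           rmb_per_usd: float = 7.0) -> float:
    """Estimate the GPT-4 API cost of one agent task, in RMB."""
    thousands_of_tokens = steps * tokens_per_step / 1000
    usd = thousands_of_tokens * usd_per_1k_tokens
    return round(usd * rmb_per_usd, 2)

print(estimate_task_cost_rmb(20))   # ideal 20-step case from the text: 28.0
print(estimate_task_cost_rmb(100))  # a task split into 100 steps: 140.0
```

Because the cost is linear in the number of steps, a 100-step task costs five times the ideal 20-step case, before counting any steps wasted in an endless loop.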

3.2. Application in the gaming field: Westworld Town

Stanford's "Westworld Town" created, for the first time, a virtual environment in which multiple agents live together. In April 2023, researchers at Stanford University published the paper "Generative Agents: Interactive Simulacra of Human Behavior", which presented a virtual town populated by generative agents. It is an interactive sandbox environment inhabited by 25 generative AI agents that simulate human behavior. They take walks in the park, drink coffee at the cafe, and share the day's news with colleagues. For example, when one agent wanted to throw a Valentine's Day party, the agents autonomously spread the invitation over the next two days, made new acquaintances, and asked each other to go to the party together. They also coordinated their schedules and showed up at the party together at the right time. Such agents have human-like traits, independent decision-making, and long-term memory, making them closer to "native AI agents". In this cooperative mode, agents are not merely tools that serve humans; they can also form social relationships with other agents in the digital world.

The memory stream, which holds a large number of observations and supports retrieval over them, is the core of the agent architecture in the Westworld town. The town's agents are built on three basic elements: memory, reflection, and planning, a slight adjustment of the core components described above. All three rest on one core, the memory stream, which stores every experience record of the agent as a list of observations. Each observation contains an event description, its creation time, and the timestamp of its last access. Observations can be the agent's own behavior or behavior it perceives from others. To retrieve the most important memories to pass to the language model, the researchers identified three factors to weigh during retrieval: recency, importance, and relevance. Each memory receives a score that is a weighted sum of these three factors, and the highest-scoring memories are passed to the large model as part of the prompt to determine the agent's next move. Reflections and plans are both created and updated from the observations in the memory stream.
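The retrieval step described above can be sketched as a scoring function. This is our reading of the paper, so treat the details as assumptions: recency decays exponentially with the hours since a memory was last accessed (the 0.995 factor and equal weights are our recollection of the paper's settings), importance is a 1 to 10 rating that the paper obtains from the LLM, relevance is cosine similarity between embeddings, and each factor is min-max normalized before the weighted sum. The memories and 2-dimensional embeddings below are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Memory:
    description: str         # natural-language event description
    created_hour: float      # creation time, in game hours
    last_access_hour: float  # time this memory was last retrieved
    importance: float        # 1-10 rating (assumed to come from the LLM)
    embedding: list[float]   # hypothetical embedding of the description

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def min_max(values: list[float]) -> list[float]:
    """Rescale to [0, 1] so the three factors are comparable."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def retrieve(memories: list[Memory], query_embedding: list[float], now_hour: float,
             k: int = 3, decay: float = 0.995,
             weights: tuple[float, float, float] = (1.0, 1.0, 1.0)) -> list[Memory]:
    # Recency: exponential decay over hours since the memory was last accessed.
    recency = min_max([decay ** (now_hour - m.last_access_hour) for m in memories])
    importance = min_max([m.importance for m in memories])
    # Relevance: similarity between each memory and the current query.
    relevance = min_max([cosine(m.embedding, query_embedding) for m in memories])
    w_rec, w_imp, w_rel = weights
    scores = [w_rec * r + w_imp * i + w_rel * v
              for r, i, v in zip(recency, importance, relevance)]
    ranked = sorted(range(len(memories)), key=lambda j: scores[j], reverse=True)
    top = [memories[j] for j in ranked[:k]]
    for m in top:
        m.last_access_hour = now_hour  # retrieval refreshes the access timestamp
    return top

memories = [
    Memory("Isabella is planning a Valentine's Day party", 10.0, 10.0, 8, [0.9, 0.1]),
    Memory("Ate breakfast at home", 11.0, 11.0, 2, [0.1, 0.9]),
    Memory("Klaus is writing a research paper", 2.0, 2.0, 5, [0.2, 0.8]),
]
# Hypothetical query embedding for "Is anything happening on Valentine's Day?"
for m in retrieve(memories, [1.0, 0.0], now_hour=12.0, k=2):
    print(m.description)
```

The top-scoring memories would then be inserted into the prompt; updating `last_access_hour` on retrieval is what makes frequently recalled memories stay "recent".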

3.3. HyperWrite: Launching the first personal AI assistant Agent

HyperWrite launched the first personal AI assistant Agent. On August 3, 2023, the AI startup HyperWrite officially launched its AI Agent application Personal Assistant, aiming to become a "digital assistant" for people. Aidan Gomez, co-founder of the generative AI startup Cohere and an investor in HyperWrite, said: "We will begin to see real personal AI assistants for the first time." As a personal assistant Agent, it can organize a user's inbox and draft replies, book flights, order takeout, find suitable resumes on LinkedIn, and so on, seamlessly integrating AI capabilities into users' daily lives and workflows. The tool is currently in a trial stage and mainly targets web-browser scenarios.

Personal Assistant can complete designated tasks in the browser autonomously. It is currently offered as a browser extension; users can start a trial after installing the extension and registering an account. Its start page resembles a search engine such as New Bing and offers only a chat box for natural-language interaction. After the user enters a goal, the extension opens a new browser page and shows each step and its reasoning in a sidebar. For example, given the goal "Give me some new ideas about AI Agents in the United States now", the assistant first runs relevant searches, then opens the relevant articles to read and summarize their ideas, and finally returns the combined summary to the chat box. The whole process takes about 2 minutes.

HyperWrite Personal Assistant is currently only at version 0.01: its functions are still limited, it makes some errors, and it responds relatively slowly. Even so, we believe AI Agents have taken their first step into the personal consumer market. As large models improve further and computing infrastructure becomes more widely available, the potential of personal AI assistants is worth looking forward to.

3.4. ModelScopeGPT: A Chinese tool for calling large models

ModelScopeGPT is an important embodiment of Alibaba Cloud's MaaS (Model as a Service) paradigm at the model-usage layer, intended to build a large-model ecosystem. Alibaba Cloud said it will open the dataset and training scheme used to build ModelScopeGPT to the public for developers. Developers can combine different large and small models as needed, helping them use large models more widely, faster, better, and more economically. Among AI developers, the ModelScope (MoDa) community is described as China's number one portal for large models: any model producer can upload its own models, validate their technical capabilities and business models, and collaborate with other community models to explore application scenarios. By allowing models to be freely combined, ModelScopeGPT further strengthens Alibaba Cloud's leading position in building a large-model ecosystem.

3.5. Inflection AI: Pi, a personal AI with high emotional intelligence

Inflection AI launched Pi, a personal AI focused on emotional companionship. Inflection AI is an AI startup founded in 2022 whose valuation has exceeded US$4 billion, second only to OpenAI among AI startups. In May 2023, the company launched its personal AI product Pi. Unlike ChatGPT, Pi has never been marketed on professional skill or on replacing human labor: it cannot write code or help produce original content. Unlike popular general-purpose chatbots, Pi only holds friendly conversations, offers concise advice, or simply listens. Its main traits are compassion, humble curiosity, humor and creativity, and strong emotional intelligence, and it offers knowledge and companionship tailored to each user's interests and needs. From the start of Pi's development, Inflection positioned it as "personal intelligence" rather than merely a tool that helps people work.

Pi is powered by Inflection-1, a large model developed by the company with performance comparable to GPT-3.5. According to the company's own evaluations, Inflection-1 slightly outperforms commonly used models such as GPT-3.5 and LLaMA on tests including multitask language understanding and common-sense questions, but lags behind GPT-3.5 in coding. This gap reflects the company's chosen differentiation: as an agent focused on emotional companionship, Pi does not need strong coding or work-assistance capabilities.

Unlike work-assistant agents, Pi meets emotional companionship needs. As an AI agent with high emotional intelligence, Pi talks with users in everyday, lifelike language rather than the cold tone of a work tool. Its replies are natural and tactful, and its concern for your current state and how events unfold resembles that of a psychologist or a close friend. When a question may carry negative emotions, Pi avoids playful or flippant phrasing that could offend the user. It even uses emojis, making users feel more as if they are talking with a real person. Pi also remembers its conversations with each user and gets to know that user better over time. Pi makes up for traditional AI's neglect of human emotional needs, and we believe there is a large market for personal AI agents like Pi that provide emotional value.

3.6. AgentBench: LLM Agent Capability Evaluation Standard

AgentBench evaluates LLMs' abilities as agents, and its rankings of LLM agent ability are widely cited. A team led by Tsinghua University proposed the first systematic benchmark for evaluating large models as AI agents. Although AI agent research is extremely popular, the industry had lacked a systematic, standardized benchmark for measuring how intelligent LLMs are when acting as agents. In August 2023, researchers from Tsinghua University, Ohio State University, and the University of California, Berkeley proposed AgentBench, which evaluates LLMs as agents on real-world challenges across 8 different environments, testing abilities such as reasoning and decision-making. The 8 environments are: operating system, database, knowledge graph, card battle game, lateral thinking puzzles, household tasks, online shopping, and web browsing. For these environments the team designed real-world challenges covering both coding and everyday scenarios, such as using SQL to extract required numbers from tables, winning a card game, and booking flights on the web.

GPT-4 leads by a wide margin, and open-source models are markedly weaker than closed-source ones. The researchers evaluated 25 mainstream large models, covering closed-source models accessed through APIs (such as OpenAI's GPT-4 and GPT-3.5) and open-source models (such as LLaMA 2 and Baichuan). In the results, GPT-4 leads in nearly every environment and marks the current frontier of large-model capability. The closed-source models Anthropic Claude and OpenAI GPT-3.5 perform at similar levels, while common open-source models such as Vicuna and Dolly score markedly lower, which the researchers attribute to their being at least an order of magnitude smaller than the closed-source models. We believe that although LLMs have reached roughly human-like levels in NLP tasks such as natural-language conversation, they still lag in capabilities that matter for agents, such as effective action, memory over long contexts, consistency across multi-turn dialogue, and code generation and execution. LLM-based AI Agents therefore still have considerable room to develop.

3.7. Application Scenarios of AI Agent in Security Business

In the vertical field of cybersecurity, Microsoft Security Copilot is still the main product form, and it is still positioned as a "co-pilot". There are no AI Agents in the security business yet, but I believe AI Agents for virus analysis, red-team AI Agents, blue-team AI Agents, and so on will appear soon.

4. Outlook: Future Development Trends of AI Agents

Based on the LLM-based AI Agents that academia and industry have built so far, we divide current AI Agents into two categories:

- Autonomous agents aim to automate complex processes. Given a goal, they create tasks, complete them, create new tasks, re-prioritize the task list, complete the new highest-priority task, and repeat this process until the goal is achieved. Because they require high accuracy, they rely on external tools to reduce the negative impact of large-model uncertainty.

- Agent simulations strive to be more human-like and believable. They divide into agents that emphasize emotional intelligence and agents that emphasize interaction. The latter usually operate in multi-agent environments and may exhibit emergent scenarios and capabilities beyond what their designers planned. Here the uncertainty of large models becomes an advantage, and the resulting diversity makes such agents likely to become an important part of AIGC.
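The autonomous-agent cycle from the first category (create tasks, complete them, create new tasks, re-prioritize, repeat) can be sketched as a task-queue loop. This is a minimal sketch under stated assumptions: `execute_task`, `create_new_tasks`, and `prioritize` are hypothetical stand-ins for what would be LLM (and tool) calls in a real agent, and their rule-based logic exists only to make the loop runnable.

```python
from collections import deque

def execute_task(task: str) -> str:
    # Hypothetical stand-in for an LLM, possibly calling tools, doing one task.
    return f"done: {task}"

def create_new_tasks(task: str, result: str, goal: str) -> list[str]:
    # Hypothetical stand-in for the LLM proposing follow-up tasks.
    # Only the planning task spawns subtasks here, so the loop terminates.
    if task == f"plan: {goal}":
        return [f"draft: {goal}", f"research: {goal}"]
    return []

def prioritize(tasks: deque[str]) -> deque[str]:
    # Hypothetical re-prioritization: research before drafting.
    order = {"research": 0, "draft": 1}
    return deque(sorted(tasks, key=lambda t: order.get(t.split(":")[0], 2)))

def run_autonomous_agent(goal: str, max_iterations: int = 10) -> list[str]:
    tasks = deque([f"plan: {goal}"])
    completed = []
    for _ in range(max_iterations):  # hard cap guards against endless loops
        if not tasks:
            break                    # no tasks left: treat the goal as achieved
        task = tasks.popleft()
        result = execute_task(task)
        completed.append(result)
        tasks.extend(create_new_tasks(task, result, goal))
        tasks = prioritize(tasks)
    return completed

print(run_autonomous_agent("market report"))
```

The iteration cap is a simple design choice that keeps a misbehaving planner from looping forever, the failure mode described for AutoGPT in section 3.1.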

A survey in the report "Top Ten Trends in the AIGC Application Layer" found that all surveyed companies consider AI Agents a definite direction for AIGC development: 50% have already piloted AI Agents in some area of work, and another 34% are drawing up AI Agent application plans. The report also makes two predictions about AI Agents. First, AI Agents will make human-machine collaboration the new normal, and individuals and companies will enter the era of AI assistants: AI Agents can help companies build a new normal of intelligent operation centered on human-machine collaboration. Second, AI Agents will change how productivity is organized and help counter rising organizational entropy. Boosted by AIGC, corporate work will become increasingly atomic and fragmented: complex processes will be broken down into fine-grained steps and then flexibly rearranged and recombined, with AI continuously raising the efficiency and potential of each step. On the supply side, an efficient collaborative model of "people + AI digital employees" offers large companies an ideal way to counter rising organizational entropy.

4.1. Autonomous Agents: Automation, a New Round of Productivity Revolution

Autonomous agents aim to automate complex processes. Dai Yusen, managing partner of ZhenFund, compares the degree of collaboration between AI and humans to the levels of autonomous driving: an AI Agent roughly corresponds to L4, where the Agent completes the task and humans provide external assistance and supervision. Autonomous agents are expected to change both the interaction model and the business model of the software industry:

- Change in interaction model: with past apps and software, people adapted to applications; with Agents, applications adapt to people. An Agent's decision-making, planning, and execution stages require a deeper understanding of user needs and stronger attention to engineering detail. For example, Agents often run into problems such as endless task expansion and misreading the required output format. Such problems cannot be solved solely by making large models more capable; they also require careful Agent architecture design and learning from vertical (domain-specific) data.

- Change in business model: the shift from charging by service content to charging by tokens demands that Agent features be more practically useful. The capability of the base large model matters, but it only sets the floor. In real enterprise scenarios, the architecture design, engineering capability, and vertical data quality of an autonomous agent are also crucial, so vertical and middleware players have opportunities too. Accuracy and efficiency are key metrics for autonomous agents (decision-making is what this kind of AI is expected to do well, which also means lower tolerance for errors). Enterprises also want low-barrier, customized Agents, so players that focus on specific domains or provide Agent frameworks still have room to grow.

Typical representatives:

- AutoGPT

- Code development: GPT Engineer

- GPT Researcher

- Creative: ShortGPT

- Multi-agent: Agent teams that complete complex development tasks, such as MetaGPT and AutoGen

4.2. Agent Simulation: Anthropomorphism, a New Kind of Emotional Consumer Product

Companion agents emphasize human characteristics such as emotional intelligence, have a "personality", and can remember past exchanges with users.

- LLMs' breakthroughs in natural language understanding make companion agents technically feasible.

- GPT-4 shows markedly higher emotional intelligence than earlier large models. As the emotional intelligence of large models improves and multimodal technology develops, we expect more well-rounded, believable companion agents that provide greater emotional value to emerge.

Research institutions believe the Chinese market for emotional consumption still has considerable room to grow (attitudes toward marriage have changed, modern work and life are fast-paced and stressful, and loneliness is increasing). Companion agents may ride this trend in emotional consumption and become important AI-native applications of the LLM era. Reasoning from first principles about users' need for companionship, we expect most of the commercial value of companion agents to concentrate in IP. We are therefore more optimistic about players that already hold rich IP portfolios or that let users customize their own agents:

- Human companionship as a reference: stranger social networking and live-streaming shows are representative applications of online companionship. The core problem of the former is that once users have built an emotional connection with each other, they move to the social platform they use most. In the latter, user value gradually concentrates on the top streamers rather than on the platforms.

- Companionship from objects as a reference: consumer goods such as designer toys have a degree of companionship value, and most of the audience's spending goes to their favorite IPs.

Typical representatives:

- Companions with high emotional intelligence and distinct personalities, such as Pi

- Platform-based entertainment, such as Character.AI and Glow

- AI players in game worlds: Voyager; Smallville, a simulated society similar to Westworld; and NetEase's mobile game Nishuihan ("Justice"), whose AI NPCs improve the player experience

- Kunlun Wanwei's "Club Koala", a more believable virtual world

5. Conclusion

This concludes our discussion. We have surveyed AI Agents as a cutting-edge technology field: from clarifying the basic concepts, to breaking down the technical principles, to exploring diverse application scenarios and looking ahead to future trends, each step shows the broad potential of AI Agent technology. Admittedly, many of the projects mentioned in this article are still in the early stages of academic research and practical exploration. The experimental results reported in papers point to promising future directions for large-model technology. These early sparks may ignite widespread AI applications, opening up new fields and opportunities and bringing intelligence to every corner of society.

Looking ahead, AI Agent technology is expected to develop rapidly over the next five years, spread further than expected, and reshape the operating models of many industries. We expect it not only to deliver large efficiency gains and disruptive changes to traditional industries, but also to become a core driver of innovation, ushering in a new era of intelligent interconnection and efficient collaboration. Let us watch together how AI Agents and industries of every kind shape the intelligent future.

For more content, follow the official account "Ting, Artificial Intelligence", which offers related resources and articles to read for free.


The AI Agents we see today often use a question-answering chatbot as the entry point: a natural-language instruction triggers a fully automatic workflow with no human intervention. Because humans only issue the instructions, they take no part in giving feedback on the AI's results.

