Generative AI is entering the agent era, with “agentic AI” and “AI agent” the buzzwords of the moment. The agent architectures and early use cases we see today represent only the beginning of a broader transformation that promises to redefine the human-machine dynamic, with profound implications for enterprise applications and infrastructure.

Generative AI has already shown broad potential in three core use cases (search, synthesis, and generation), each of which has found strong market fit. Several companies in Menlo Ventures' portfolio illustrate this: Sana (enterprise search), Eve (legal research assistant), and Typeface* (content generation) are early breakout examples in these categories, and all of them build on the few-shot reasoning capability of large language models (LLMs).

However, the future of generative AI extends far beyond these first core applications. While AI that can read and write is impressive enough, even more exciting is AI that can think and act on our behalf. Here, cutting-edge application developers such as Anterior, Sema4, and Cognition are building solutions that can handle workflows that used to require a lot of human involvement.

By introducing new building blocks such as multi-step logic, external memory, and access to third-party tools and APIs, a new generation of AI agents is pushing the boundaries of AI capabilities and driving the automation of end-to-end processes.

As we explore the field of AI agents further, we will elaborate on Menlo Ventures' views on the emerging market. First, we will clarify the definition of agents and the key factors that enable their realization. Then, we will trace the architectural development of the modern AI stack, from simple prompt input, to retrieval-augmented generation (RAG) technology, to mature agent systems. In subsequent articles, we will also explore the profound impact of this paradigm shift on the application and infrastructure levels.

1. Four building blocks of AI agents

Fully autonomous agents are defined by four elements that combine to achieve full agent capabilities: reasoning, external memory, execution, and planning.

Reasoning

At the most basic level, agents must be able to reason over unstructured data. Foundation models from providers such as Anthropic* and OpenAI already do this remarkably well: the pre-trained weights of their large language models (LLMs) encode a partial world model that supplies broad general knowledge and basic logic.

External Memory

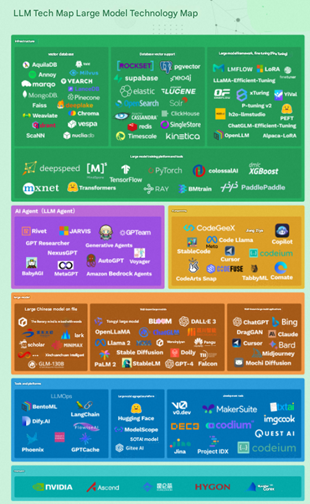

In addition to built-in general knowledge, agents rely on external memory to store and recall the domain-specific knowledge and context needed for the problem at hand. This is typically implemented with a vector database such as Pinecone*.

Execution

To solve problems more effectively, agents need tools to perform tasks. Many early agent platforms shipped with a toolbox of custom tools pre-defined in code, from which the agent could choose as needed. More recently, a growing number of general-purpose agent tools have emerged, including web browsing, code interpretation, authentication and authorization services, and connectors to enterprise systems (such as CRM and ERP) that perform user interface actions within those systems.

Planning

Instead of simply solving problems by continuously predicting the next token (such as writing an entire article in one go), intelligent agents use a more human-like way of thinking, breaking down complex tasks into smaller subtasks and plans, constantly reflecting on progress, and making necessary adjustments based on actual conditions. This strategic planning capability enables agents to handle complex problems more efficiently and respond flexibly to various challenges.

2. AI Agent Examples: From RAG to Autonomous Agents

It is important to note that while future fully autonomous agents may integrate all four core building blocks, current Large Language Model (LLM) applications and agents are not yet at this stage.

For example, the popular Retrieval-Augmented Generation (RAG) architecture is not agentic, although it relies on reasoning and external memory as its foundation. Some designs, such as those built on OpenAI's structured output feature, even support tool use. The key difference is that these applications still treat the LLM as a "tool" for semantic search, synthesis, or generation, while their logical flow is still determined by preset code.

In contrast, agents emerge when LLMs are placed at the heart of an application’s control flow, dynamically deciding what actions to perform, which tools to use, and how to parse and respond to input. By this definition, an agent does not necessarily need to interact with external tools or take actual actions.

At Menlo, we distinguish three types of agents, which differ in their primary use case and in the degree of freedom they have over the application's control flow.

The most constrained are “decision agent” designs, which use language models to navigate predefined decision trees. “Track agents” offer more degrees of freedom: the agent is given a higher-level goal, but its solution space is constrained by standard operating procedures (SOPs) and a library of preset “tools”. Finally, at the other end of the spectrum are “general AI agents”, which have little or no data scaffolding around them and rely entirely on the reasoning power of the language model for planning, reflection, and course correction, much like an unfettered for loop.
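The "for loop" framing of a general agent can be made concrete with a small sketch. Everything here is hypothetical: `fake_llm_next_action` is a scripted stand-in for a real model call, and the two entries in `TOOLS` are toy functions. The point is only that the model, not the application code, decides the next step on each iteration.

```python
def fake_llm_next_action(goal, history):
    """Hypothetical stand-in for a model call; the policy is scripted for illustration."""
    if not history:
        return {"action": "search", "input": goal}
    if history[-1]["action"] == "search":
        return {"action": "summarize", "input": history[-1]["observation"]}
    return {"action": "finish", "input": history[-1]["observation"]}

# Toy tools; a real agent would call browsers, APIs, or code sandboxes here.
TOOLS = {
    "search": lambda text: f"results for '{text}'",
    "summarize": lambda text: f"summary of {text}",
}

def run_agent(goal, max_steps=5):
    """Loop until the model says it is finished or the step budget runs out."""
    history = []
    for _ in range(max_steps):
        step = fake_llm_next_action(goal, history)
        if step["action"] == "finish":
            return step["input"], history
        observation = TOOLS[step["action"]](step["input"])
        history.append({**step, "observation": observation})
    return None, history  # budget exhausted without a final answer

answer, history = run_agent("agent frameworks")
```

The `max_steps` budget is the only hard constraint in this sketch; decision agents and track agents add progressively more structure around the same loop.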

Next, we’ll dive deeper into five reference architectures and AI agent examples for each agent type.

3. Retrieval-Augmented Generation (RAG)

Establishing a baseline: Retrieval-Augmented Generation (RAG) has become the standard architecture for many modern AI applications. Taking Sana’s enterprise search function as an example, we can take a closer look at its inner workings.

The process begins with loading and transforming unstructured files (such as PDFs, slides, and text files), which are usually stored in enterprise data silos such as Google Drive and Notion. Data preprocessing engines such as Unstructured convert these files into a format that large language models (LLMs) can query. During this step, the files are "chunked" into smaller text units for more accurate retrieval. These chunks are then embedded as vectors and stored in a database such as Pinecone.

When a user sends the AI application a query (e.g., “Please summarize all my meeting notes with Company X”), the system retrieves the text chunks that are semantically most relevant to the query and folds them into a “meta-prompt”, that is, the user's question augmented with the retrieved information. This meta-prompt is then provided to the LLM, which synthesizes an answer from the supplied context and returns a concise response to the user.
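The chunk, embed, retrieve, and prompt steps described above can be sketched in a few lines. This is a toy illustration under loud assumptions: `embed` is a bag-of-words counter standing in for a real embedding model, the in-memory `index` list stands in for a vector database, and the final LLM call is omitted. None of it reflects how Sana, Unstructured, or Pinecone are actually implemented.

```python
import math
import re
from collections import Counter

def chunk(text, size=12):
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def embed(text):
    """Toy bag-of-words 'embedding' (assumption: stands in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, index, k=2):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

def build_prompt(query, chunks):
    """Assemble the 'meta-prompt': the question plus the retrieved context."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"

documents = [
    "Meeting with Company X: discussed pricing and a pilot in March.",
    "Lunch menu for Friday: pasta, salad, and soup.",
]
# The "vector database": a list of (chunk text, embedding) pairs.
index = [(c, embed(c)) for doc in documents for c in chunk(doc)]

query = "Summarize my meeting notes with Company X"
top_chunks = retrieve(query, index, k=1)
prompt = build_prompt(query, top_chunks)  # this string would be sent to the LLM
```

Note that the application code fixes the order of steps; the LLM only sees the finished prompt, which is why this architecture is not agentic.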

It is worth noting that the flow above shows only a single retrieval step with a single LLM call. In practice, AI applications are more complicated and may contain dozens or even hundreds of retrieval steps. These applications usually adopt a "prompt chain" approach, where the output of one step is used as the input of the next, and multiple prompt chains for different types of tasks are executed in parallel. Finally, the results are combined to generate the final output.

For example, the legal research copilot Eve* might break down a research query about Title VII into separate prompt chains that focus on predetermined subtopics, such as employer background, employment history, Title VII, relevant case law, and supporting evidence for the plaintiff's case. The LLM then runs each prompt chain, generates intermediate outputs for each, and synthesizes the outputs to write a final memorandum.
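A prompt chain of this kind can be sketched as follows. The subtopics, prompt templates, and the `call_llm` stub are all hypothetical and chosen only to mirror the example above; this is not Eve's implementation. Each chain runs sequentially, the chains run in parallel, and a final call synthesizes the intermediate outputs.

```python
from concurrent.futures import ThreadPoolExecutor

def call_llm(prompt):
    """Hypothetical stand-in for a model call; it just echoes a tagged string."""
    return f"<answer to: {prompt}>"

def run_chain(steps, initial_input):
    """Run prompts in sequence, feeding each output into the next step."""
    output = initial_input
    for template in steps:
        output = call_llm(template.format(input=output))
    return output

# Predetermined subtopics, each with its own fixed chain of prompts.
CHAINS = {
    "case_law": ["List cases relevant to: {input}", "Summarize these cases: {input}"],
    "evidence": ["List evidence relevant to: {input}", "Assess this evidence: {input}"],
}

def research(query):
    """Run all chains in parallel, then synthesize one final output."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(run_chain, steps, query) for name, steps in CHAINS.items()}
        intermediate = {name: future.result() for name, future in futures.items()}
    combined = "\n".join(f"{name}: {text}" for name, text in intermediate.items())
    return call_llm(f"Write a memo from these findings:\n{combined}")

memo = research("Title VII claim")
```

The set of chains and the order of prompts inside each chain are fixed in code, so the control flow remains predetermined even though many LLM calls are involved.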

4. Tool Usage

In the field of artificial intelligence, tool invocation or function execution marks an important step from retrieval-augmented generation (RAG) to agent behavior, adding a new dimension to modern AI architectures.

These tools are essentially predefined code modules designed to perform specific tasks. General-purpose tools have emerged for common needs such as web browsing (e.g., Browserbase, Tiny Fish), code interpretation (e.g., E2B), and authorization and authentication (e.g., Anon). They give large language models (LLMs) the ability to browse web pages, interact with external systems (such as CRM and ERP), and execute custom code. The system presents the available tools to the LLM, which selects an appropriate tool, formats the necessary input as structured JSON, and triggers execution through an API call to complete the operation.
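The select, format, and execute cycle described above can be sketched as a small dispatcher. The tool names, the JSON shape (`tool` and `arguments` keys), and `fake_llm_choose_tool` are assumptions made for illustration; real providers each define their own tool-calling schema.

```python
import json

def get_account(account_id):
    """Toy connector standing in for a CRM lookup."""
    return {"account_id": account_id, "status": "active"}

def add(a, b):
    """Toy calculation tool."""
    return a + b

# Registry of tools the application exposes to the model.
TOOLS = {"get_account": get_account, "add": add}

def fake_llm_choose_tool(user_request, tool_names):
    """Hypothetical model: picks a tool and returns the call as a JSON string."""
    if "account" in user_request:
        return json.dumps({"tool": "get_account", "arguments": {"account_id": "A-17"}})
    return json.dumps({"tool": "add", "arguments": {"a": 2, "b": 3}})

def handle(user_request):
    """Present the tools, parse the model's structured choice, and execute it."""
    raw = fake_llm_choose_tool(user_request, list(TOOLS))
    call = json.loads(raw)
    tool = TOOLS.get(call["tool"])
    if tool is None:
        raise ValueError(f"model requested unknown tool: {call['tool']}")
    return tool(**call["arguments"])

lookup = handle("look up the account for this customer")
total = handle("what is 2 + 3")
```

The model chooses which tool to call, but `handle` always performs exactly one choose-then-execute step, which is the sense in which the control flow is still owned by the application.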

Omni’s Calculations AI feature is a vivid example of this approach: it uses an LLM to write the appropriate Excel functions directly into a spreadsheet, which then performs the calculations and generates complex query results for the user.

However, while tool use is powerful, it is not sufficient by itself to constitute an "agent", because the logical control flow is still predetermined by the application. In the following sections, we will dig into true agent designs, which allow the LLM to dynamically write part or all of the logical control flow.

5. Decisioning Agent

The first type of agent we will discuss in depth is the decision agent, which uses the LLM's decision-making ability to guide complex multi-step reasoning processes and make business decisions based on them. Unlike the RAG architecture or tool use, this architecture is the first to hand over part of the control logic to a large language model (LLM) instead of hard-coding all the steps in advance. However, it still sits at the lower end of the range of agentic freedom, because the agent mainly acts as a "router" that navigates a predefined decision tree.

Take Anterior (formerly Co:Helm), a company focused on health plan automation that has developed a clinical decision engine to automatically review claim submissions. Traditionally, these reviews are done manually by nurses, who follow payer rules full of conditional knowledge, similar to a complex “choose your own adventure” game.

Anterior simplifies this cumbersome process. They first transform the payer rules into a directed acyclic graph (DAG) using rule-based scripts and language models. Their agent then traverses this decision tree, using LLMs at each node to evaluate the relevant clinical documents against specific rules. For simpler nodes, this may involve only a basic retrieval-augmented generation (RAG) step. However, Anterior often faces more complex tasks that require subchains, where the agent must choose the best path before proceeding to the next node. The agent updates its state with each decision (managing these intermediate results in memory) and continues to advance through the decision tree until a final decision is reached.
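As a rough sketch of this traversal pattern (not Anterior's implementation), the agent below walks a small rule graph, asks a model stub to evaluate the document at each node, records the answer in its state, and routes to the next node. The rule graph, node names, and `fake_llm_evaluate` are hypothetical; the keyword check stands in for a real LLM or RAG evaluation.

```python
# Hypothetical rule graph: inner nodes ask a question, leaf nodes carry a decision.
RULES = {
    "diagnosis_documented": {
        "question": "Is a qualifying diagnosis documented?",
        "keyword": "diagnosis",
        "yes": "conservative_care_tried",
        "no": "deny",
    },
    "conservative_care_tried": {
        "question": "Was conservative treatment attempted first?",
        "keyword": "physical therapy",
        "yes": "approve",
        "no": "escalate",
    },
    "approve": {"decision": "approve"},
    "deny": {"decision": "deny"},
    "escalate": {"decision": "escalate to nurse review"},
}

def fake_llm_evaluate(node, document):
    """Stand-in for an LLM judgment: a keyword check, purely for illustration."""
    return node["keyword"] in document.lower()

def review(document, rules, start="diagnosis_documented"):
    """Traverse the graph from `start` until a leaf decision is reached."""
    state = {"path": [], "answers": {}}  # intermediate results kept in memory
    node_id = start
    while "decision" not in rules[node_id]:
        node = rules[node_id]
        answer = fake_llm_evaluate(node, document)
        state["path"].append(node_id)
        state["answers"][node_id] = answer
        node_id = node["yes"] if answer else node["no"]  # the model's answer routes the flow
    state["decision"] = rules[node_id]["decision"]
    return state

result = review("Diagnosis: knee osteoarthritis. Completed physical therapy.", RULES)
```

The graph itself is fixed in advance, so the model's freedom is limited to choosing which edge to follow at each node, which matches the "router" role described earlier.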

Anterior is not alone in taking this approach. For example, Norm AI is building an AI agent for regulatory compliance, while Parcha is building an agent for know-your-customer (KYC) identity verification.